AI is no longer a futuristic experiment or something quietly running in the background. In 2026, it actively approves loans, flags fraud, diagnoses conditions, personalizes experiences, and makes real-time decisions that affect millions of users. Still, while AI capabilities move fast, the risks tied to unreliable, biased, or unstable behavior grow just as quickly. Testing AI applications has become one of the most critical safeguards between innovation and real-world failure.

Unlike traditional software, AI systems learn, adapt, and evolve, often in ways that are difficult to predict. A model that performs perfectly today can quietly degrade tomorrow under new data, a heavier load, or shifting user behavior. Today, we are going to explore how AI application testing truly works in practice: where it breaks, what must be validated, how teams manage long-term risk, and what we’ve learned firsthand from testing real AI-powered systems in production.

Why Is Testing AI Applications So Important?

Testing AI applications is no longer just a technical quality check — it has become a critical component for improving business performance, compliance, and trust. In 2026, AI applications influence approvals, recommendations, fraud detection, forecasting, and automated decision-making at an increasingly large scale. The nature of AI introduces probability, continuous learning, and behavior drift into the testing lifecycle, which makes classic software testing approaches insufficient on their own. This is why the need for testing AI applications today is driven first by risk control, not just convenience.

AI errors scale into business failures

Unlike traditional software defects, AI failures rarely stay isolated. Incorrect AI decisions can block legitimate users, approve fraudulent transactions, distort analytics, or trigger wrong automated actions across entire business flows. In many AI-powered applications, a model that passed early validation can silently degrade in production due to data drift or changing user behavior. This makes continuous testing essential to maintaining the accuracy of AI and the stability of the AI system’s performance.

AI introduces risks that traditional software never had

Traditional software follows fixed logic. AI systems learn from data, adapt over time, and produce probabilistic outcomes. As a result, testing AI applications must validate not only expected functionality, but also:

- How AI models react to shifting data patterns

- Whether test data still reflects real-world conditions

- How stable the predictions remain over time

This fundamental difference is why testing AI applications must combine unit testing, integration testing, performance testing, and security testing into a single, evolving testing process.

Regulatory pressure makes AI testing mandatory

In many industries, testing AI applications is now tied directly to compliance testing. Companies must demonstrate that their AI-based applications make explainable decisions, operate within defined accuracy limits, and can be audited. Without rigorous testing documentation, proving how AI decisions were produced becomes nearly impossible in regulated sectors such as finance, healthcare, and insurance.

Skipping AI testing becomes expensive fast

Teams that underestimate the effort to test AI applications effectively often face delayed defect detection, unreliable predictions, and escalating operational costs. High model accuracy during development does not guarantee stable behavior in production. This is why the role of testing in AI projects has shifted from simple validation to continuous business risk protection.

Don’t wait for bugs to be found by users — ensure a spotless UX with testing.

Different AI Models and How to Test Them

Not all AI models behave the same way in production, which means AI application testing must adapt to the specific risks of each model type. While the core principles of testing AI applications remain consistent, the testing approach, test scenarios, and test data priorities change significantly depending on how the AI system learns, predicts, or reacts to inputs. Here are the most common AI model categories used in real-world AI-based applications and what testing focuses on in each case.

Predictive and classification models (scoring, forecasting, risk engines)

These AI models drive decisions such as credit scoring, fraud detection, demand forecasting, and churn prediction. Errors here directly affect revenue and risk exposure.

What to test:

- Accuracy of AI against validated historical data

- Stability of predictions under changing input patterns

- Sensitivity to incomplete or noisy test data

- Performance of AI under peak inference load

In practice, AI model testing for predictive systems focuses on output consistency, decision thresholds, and long-term prediction decay. These models often pass early validation but degrade silently in production without continuous testing.

Recommendation engines (personalization, content, product matching)

Recommendation models power feeds, product suggestions, and personalization logic across many AI-powered applications.

What to test:

- Relevance and diversity of recommendations

- Coverage across different user segments

- Cold-start behavior with limited data

- Stability when preferences shift

For these systems, testing AI applications must validate not only mathematical accuracy, but also how the AI algorithms influence real user behavior and business KPIs.

Computer vision models (image, video, object detection)

Vision-based AI systems are widely used in quality control, security monitoring, medical imaging, and document processing.

What to test:

- Detection accuracy across real-world conditions

- Performance under poor lighting, occlusions, and noise

- Bias in recognition across object or demographic groups

- AI system’s performance under high-volume streams

Here, reliability testing and performance testing are just as important as the accuracy of AI, since visual conditions change constantly in production.

Speech recognition and voice AI models

Voice-based AI apps require high reliability in noisy, unpredictable environments.

What to test:

- Recognition accuracy across accents and speech speeds

- Performance in background noise

- Latency in real-time processing

- Integration testing with downstream AI-based systems

These AI models often behave well in controlled environments but reveal critical weaknesses under real operational conditions.

Anomaly and fraud detection models

Fraud detection AI systems operate under constantly changing attack patterns, making them some of the hardest AI models to validate.

What to test:

- Detection accuracy under new fraud patterns

- False positive and false negative balance

- Stability of decisions as transaction volumes grow

- AI system’s performance during sudden traffic spikes

Testing AI systems in this category relies heavily on synthetic data generation and continuous testing in production-like conditions.

Generative AI models (chatbots, text, image, and code generation)

Generative AI models power AI chatbot systems, copilots, and content generation tools. Unlike predictive models, they do not return fixed “correct” answers and introduce variability, creativity, and open-ended outputs into the testing process.

What to test:

- Output relevance and consistency across similar prompts

- Stability of responses under repeated queries

- Resistance to unsafe, biased, or misleading outputs

- Performance and latency under real user load

While testing generative AI follows a different validation logic than classical AI model testing, it still requires structured test scenarios, controlled test data, and clear acceptance criteria. A detailed breakdown of testing generative AI is covered in our separate dedicated guide.

70% bugs cut, CTR increased by 20%: Our latest AI model testing project

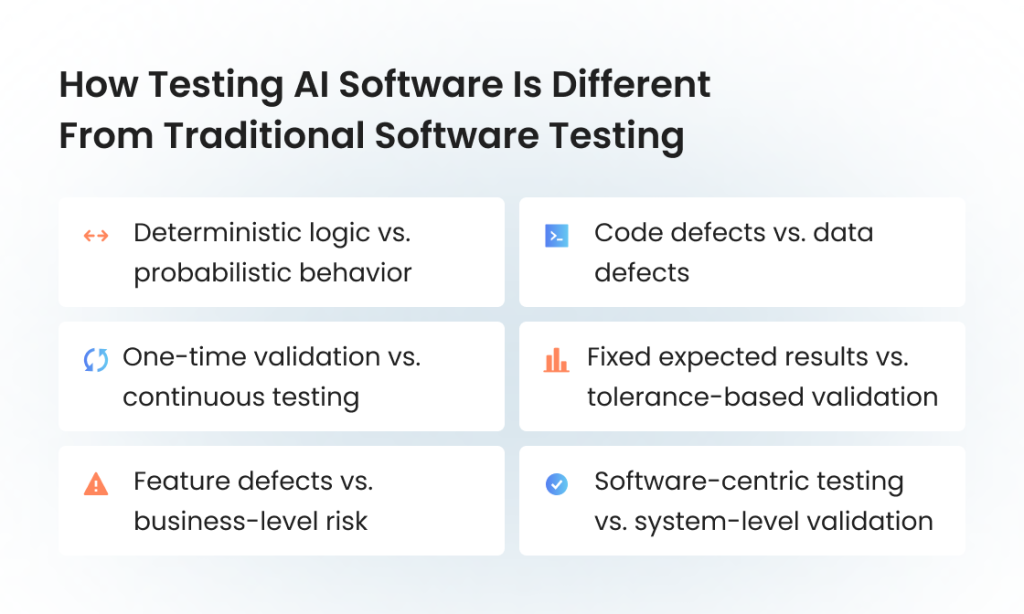

How Testing AI Software Is Different From Traditional Software Testing

Testing AI software may resemble classic app testing on the surface, but the nature of AI applications changes what quality actually means. Instead of validating fixed logic, teams must work with systems that evolve after release. Here are the key differences that reshape the entire testing process.

Deterministic logic vs. probabilistic behavior

In traditional software testing, the same input always produces the same output. In AI application testing, results are probabilistic. Instead of verifying one correct answer, teams validate confidence ranges, output stability, and behavior trends across large datasets.

Code defects vs. data defects

In classic software, most failures come from incorrect logic. In testing AI applications, many critical issues originate from test data: biased samples, poor labeling, outdated datasets, or training–production mismatches. Data becomes a primary test artifact, not just an input.

One-time validation vs. continuous testing

Traditional software testing is usually tied to releases. AI-based applications require continuous testing because model performance can degrade after deployment due to data drift, evolving user behavior, or environmental changes. A model that passed validation once may fail months later.

Fixed expected results vs. tolerance-based validation

Traditional test cases validate exact outputs. When teams test AI applications, expected results are often defined as acceptable ranges, statistical thresholds, or error margins rather than absolute values.

Feature defects vs. business-level risk

Classic software defects usually affect isolated features. AI failures directly impact AI decisions in pricing, fraud detection, approvals, recommendations, and forecasting. This shifts the role of testing from simple bug detection to business risk control.

Software-centric testing vs. system-level validation

Traditional testing focuses mainly on application logic. Testing AI systems must validate the full pipeline: data ingestion, model behavior, inference performance, integrations, and post-release monitoring within a single testing lifecycle.

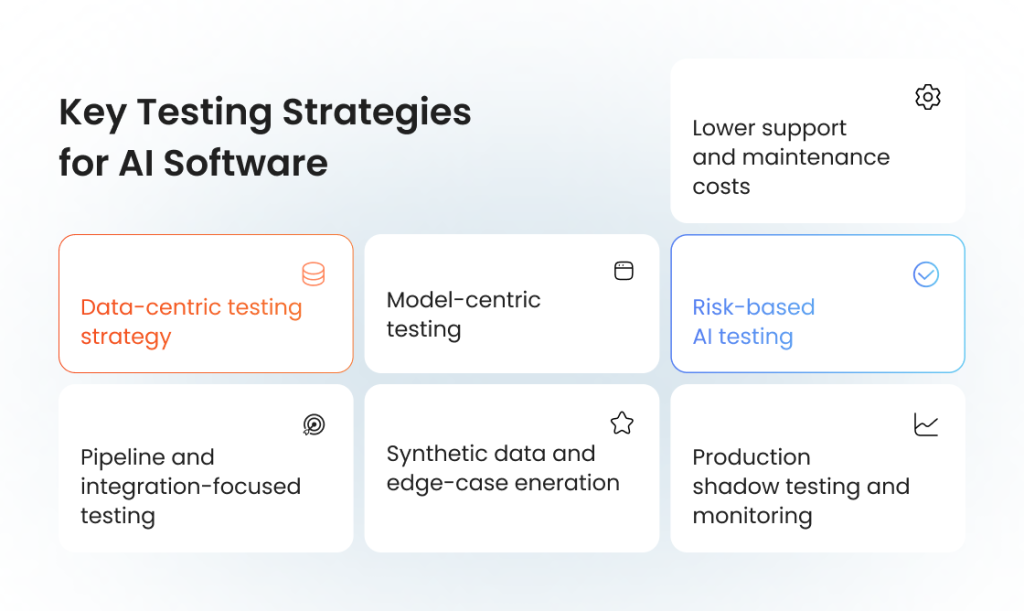

Key Testing Strategies for AI Software

Effective testing of AI applications requires more than applying standard software testing techniques to AI-based systems. Because AI models learn from data, adapt over time, and influence real business decisions, testing strategies must address uncertainty, change, and scale. These are the core testing strategies that make AI application testing reliable in production.

Data-centric testing strategy

In AI systems, data quality directly defines output quality. This strategy focuses on validating training, validation, and test data for completeness, balance, bias, and relevance. Teams test AI applications by checking how sensitive models are to missing values, noisy inputs, skewed distributions, and labeling errors. Without this layer, even well-designed AI models often fail in production.

Model-centric testing

This strategy focuses on direct AI model testing rather than only validating the surrounding application. It includes checking prediction accuracy, decision stability, threshold behavior, and performance of AI under different input conditions. Teams test your model across known scenarios and edge cases to ensure it behaves consistently within acceptable risk limits.

Risk-based AI testing

Not all AI decisions carry the same business impact. Risk-based testing prioritizes AI system areas where failures would cause the highest financial, legal, or safety damage, such as fraud detection, compliance scoring, medical analysis, or automated approvals. This approach helps define realistic test coverage instead of attempting to validate every possible outcome.

Pipeline and integration-focused testing

AI never works in isolation. Data pipelines, APIs, feature stores, and inference services must all function reliably for the AI-powered application to stay stable. Integration testing ensures data flows correctly between components, predictions are delivered on time, and downstream systems receive valid outputs. This is critical for production-grade AI-powered app testing.

Synthetic data and edge-case generation

Real-world AI failures often appear in rare scenarios that are poorly represented in historical datasets. Synthetic data generation allows teams to test AI applications against extreme, unexpected, or previously unseen conditions — fraud spikes, sensor failures, unusual user behavior, or corrupted inputs.

Production shadow testing and monitoring

Some AI risks cannot be fully detected before release. In shadow testing, models run in parallel with live systems without affecting real users. This allows teams to evaluate AI system’s performance, prediction stability, and failure patterns under real traffic before full rollout. Combined with continuous testing, this strategy helps detect degradation early.

Automation-assisted testing for scale

Modern AI application testing relies on test automation to continuously validate pipelines, retrain cycles, and prediction outputs. AI automation does not replace human validation but enables scalable regression testing across large datasets, frequent model updates, and complex testing lifecycles.

We will combine manual testing and automation to get your app where you want, fast.

AI App Testing Focus Areas: Common Risk Zones

When teams test AI applications, most critical failures originate not from UI defects but from deeper data, model, and infrastructure risks. Here are the primary risk zones that require targeted attention during AI app testing:

- Training and input data quality. Incomplete, biased, outdated, or poorly labeled test data directly distorts AI decisions and prediction accuracy.

- Data drift and concept drift. Real-world behavior changes over time, causing previously accurate AI models to degrade silently in production.

- Model stability and consistency. Small input changes can trigger unstable outputs if the AI model lacks decision accuracy.

- Bias, fairness, and explainability. AI-based applications must be validated for discriminatory behavior and audit-ready decision logic.

- Inference performance and scalability. Even accurate AI fails if latency spikes during real-time predictions or batch processing.

- Security and adversarial resistance. Malicious inputs, data poisoning, and model manipulation threaten the integrity of AI-powered applications.

- Integration and pipeline reliability. Data ingestion, feature pipelines, APIs, and downstream consumers often cause AI failures outside the model itself.

- Human-AI interaction and trust. Users must correctly interpret predictions, override decisions when needed, and maintain confidence in AI-driven workflows.

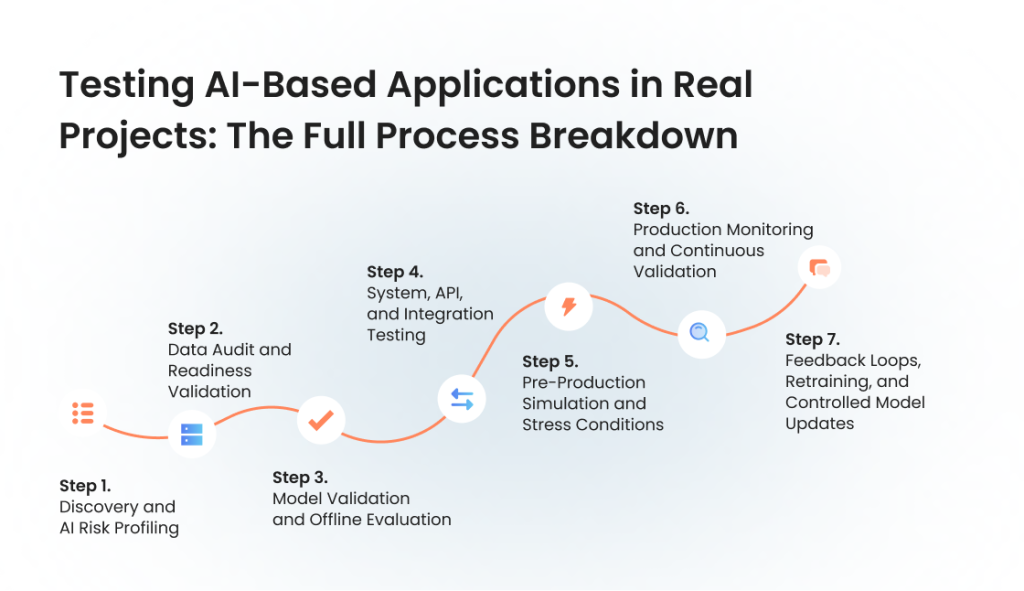

Testing AI-Based Applications in Real Projects: The Full Process Breakdown

In real delivery environments, testing AI-based applications is never a single isolated phase. It is a structured, repeatable process that spans data, models, integrations, and live production behavior. Here is how testing AI applications actually unfolds in practice — not as a theoretical lifecycle, but as a delivery-proven framework used to control business risk.

1. Discovery and AI Risk Profiling

This stage defines what quality actually means for a specific AI-powered application from a business, regulatory, and operational perspective.

What usually breaks:

Teams often begin AI development with unclear quality expectations, vague success metrics, and underestimated business risk. This leads to misaligned validation goals and false confidence in early model accuracy.

What we focus on:

At this stage, we define how the AI application will be used, what decisions it will influence, what level of error is acceptable, and which failures carry financial, legal, or operational impact. This step sets measurable quality thresholds for how to test AI applications across accuracy, stability, fairness, and performance.

Why it matters:

Without early AI risk evaluation, testing efforts become scattered and disconnected from business priorities. This step ensures that the entire testing process is aligned with what actually matters to the organization.

2. Data Audit and Readiness Validation

Here, the goal is to confirm that the data feeding the AI system is reliable enough to support meaningful model validation.

What usually breaks:

Poorly labeled datasets, biased samples, missing values, and mismatches between training and production data are among the most common root causes of AI failure.

What we focus on:

We audit training, validation, and test data for quality, balance, bias, duplication, anomalies, and real-world representativeness. Test data becomes a core testing artifact, not a byproduct of development.

Why it matters:

In testing AI applications, data defects often outweigh code defects. If input data is flawed, no level of model optimization can compensate for the distortion it introduces into AI decisions.

3. Model Validation and Offline Evaluation

This is where the AI model itself is validated in isolation before being exposed to full system complexity.

What usually breaks:

Many AI models appear accurate during early experiments but behave unpredictably outside narrow validation sets. Threshold instability and sensitivity to small input changes are common issues.

What we focus on:

At this stage, we perform focused AI model testing: validating accuracy ranges, confidence behavior, edge-case handling, and prediction stability under varying input conditions. This is also where early performance of AI inference is verified.

Why it matters:

This step ensures that the AI model behaves acceptably before it ever reaches a live environment, reducing the risk of immediate production instability.

4. System, API, and Integration Testing

Once the model is validated, the focus shifts to how it behaves inside the full AI-based system.

What usually breaks:

In production, most AI failures do not originate inside the model. They occur in data pipelines, feature engineering layers, APIs, orchestration logic, and downstream systems consuming predictions.

What we focus on:

We run full integration testing across data ingestion, preprocessing, inference services, and consumer applications. This ensures that AI outputs remain correct, timely, and synchronized across the entire AI-powered application.

Why it matters:

Testing AI systems at a system level prevents failures that pure model validation would never reveal, especially in complex, distributed environments.

5. Pre-Production Simulation and Stress Conditions

This phase recreates real operational pressure before real users are exposed to the system.

What usually breaks:

AI models may behave correctly in controlled test environments but fail under traffic spikes, abnormal usage patterns, partial data loss, or unexpected input combinations.

What we focus on:

We simulate real business conditions using realistic test scenarios: peak user activity, data gaps, extreme values, and unusual operational patterns. This is also where performance testing, reliability testing, and security testing intersect.

Why it matters:

This step exposes the gap between laboratory validation and real-world behavior — the exact space where many AI projects fail after launch.

6. Production Monitoring and Continuous Validation

After release, testing shifts from controlled experiments to real-time quality surveillance.

What usually breaks:

Even well-tested AI models degrade over time due to data drift, concept drift, market changes, and evolving user behavior.

What we focus on:

After release, we apply continuous testing to track prediction stability, performance of AI, decision accuracy trends, and anomaly patterns in live traffic. Shadow testing and controlled rollouts are often used at this stage.

Why it matters:

Testing AI applications does not end at deployment. This step protects the business from silent model decay that can otherwise go undetected for months.

7. Feedback Loops, Retraining, and Controlled Model Updates

This step is aimed at evaluating how safely an AI system evolves after going live.

What usually breaks:

Uncontrolled retraining can introduce new defects even when models improve in isolation. Version overlap, feature drift, and rollback failures are common risks.

What we focus on:

We validate how models are retrained, versioned, deployed, and rolled back. Every update goes through a verified testing lifecycle before replacing a live AI-based application.

Why it matters:

This step ensures that AI improvement does not come at the cost of system stability, compliance, or business trust.

We make sure AI apps work flawlessly in real-life scenarios, not just lab environments.

Post-Deployment AI Software Testing & Monitoring

For traditional software, testing usually slows down after release. For AI-based applications, the opposite is true. Once an AI system enters production, real-world data, user behavior, and external conditions begin to reshape its performance. This is why post-deployment AI software testing and monitoring are not optional extensions of the testing process — they are core mechanisms for protecting business stability, safety, and trust over time.

In practice, many of the most damaging AI failures occur after launch, not before it. Silent accuracy decay, biased decision patterns, performance bottlenecks, and undetected data shifts often surface only under real operational pressure.

Continuous performance and accuracy tracking

After deployment, teams must continuously track whether the accuracy of AI remains within acceptable limits. This includes monitoring prediction stability, confidence score behavior, and error trends across different user segments and scenarios. A gradual decline in the performance of AI is often subtle and cannot be detected through periodic spot checks alone.

Data drift and concept drift detection

Production data rarely remains static. As markets shift, user behavior evolves, and external conditions change, the data feeding the AI system may move away from the original training distribution. Post-deployment testing focuses on identifying both:

- Data drift — changes in input data patterns

- Concept drift — changes in how inputs relate to outcomes

Without active detection, AI models may continue operating while silently making less reliable decisions.

Real-world anomaly and failure pattern monitoring

Many AI failures do not resemble traditional software defects. Instead of clear crashes, teams see abnormal decision spikes, inconsistent predictions, or sudden changes in approval rates, risk scores, or recommendations. Post-deployment monitoring focuses on detecting these behavioral anomalies early, before they escalate into visible business incidents.

Shadow testing and controlled exposure

Before fully rolling out new models or retrained versions, AI teams often use shadow testing and controlled release strategies. The updated model runs in parallel with the live system without affecting real users. This allows teams to compare performance, stability, and decision behavior under real traffic before committing to full deployment.

Production-grade performance validation

Inference latency, throughput, and system responsiveness must remain stable under real loads. Post-deployment performance testing ensures that the AI system’s performance does not degrade during peak usage, batch processing windows, or unexpected traffic surges. Even highly accurate AI can fail operationally if predictions arrive too late to be useful.

Retraining control and regression validation

Retraining is not merely a model improvement exercise — it is also a source of new risk. Every retrained model must go through regression testing to ensure it has not introduced new defects, biases, or performance regressions. Without controlled retraining pipelines, teams risk replacing one problem with another.

Audit readiness and traceability in production

In regulated environments, post-deployment testing also supports auditability. Teams must be able to trace:

- Which model version made a decision

- What data was used at the time

- What validation results were in place during operation

This traceability is essential for regulatory reviews, internal investigations, and compliance reporting.

Why post-deployment testing defines long-term AI success

Most AI failures do not announce themselves with obvious errors. They emerge slowly as prediction quality erodes, biases intensify, or performance degrades under growing load. Post-deployment AI software testing turns production into a controlled validation environment rather than an uncontrolled experiment.

Without continuous monitoring, even well-tested AI-based applications eventually drift away from the conditions they were originally validated against, and the business absorbs the consequences long before engineering teams receive clear warning signals.

Challenges in Testing AI Solutions

Testing AI applications introduces a very different class of challenges compared to traditional software testing. These challenges come not only from technical complexity, but also from the uncertainty of data, evolving model behavior, regulatory pressure, and the high business impact of AI-driven decisions. When teams test AI applications in real production environments, they quickly realize that many classic testing assumptions no longer hold true. Here are the most critical challenges that consistently affect the testing of AI-based applications across different industries.

No single definition of a “correct” result

In traditional software testing, expected outcomes are clearly defined: a function either returns the right value or it doesn’t. In testing AI applications, the situation is rarely that simple. Many AI models operate within probability ranges and confidence thresholds rather than absolute correctness. This makes it difficult to define precise expected results for test cases and forces teams to validate acceptable behavior patterns instead of exact outputs.

Constantly changing test data

AI systems depend heavily on training and production data that rarely remains stable. What qualified as representative test data a few months ago may already be outdated today. New user behavior, seasonal trends, market shifts, and external events all affect incoming data. As a result, maintaining reliable test data and consistent test coverage over time becomes one of the most resource-intensive parts of testing AI applications.

Bias hidden inside the model

Even when overall accuracy metrics appear strong, AI models may still behave unfairly across different user segments, geographies, or behavioral groups. These biases are often subtle and difficult to detect with small validation datasets. Without targeted fairness and bias analysis, AI-based applications risk producing systematically distorted decisions that can lead to legal exposure and reputational damage.

Performance decay after release

Many AI models perform well during pre-release validation but gradually lose accuracy and stability once exposed to real-world data. Data drift, concept drift, product changes, and evolving user behavior all contribute to this decline. This creates a major challenge for teams that rely only on pre-launch testing and do not implement continuous post-deployment validation of AI system performance.

Limited explainability of decisions

In regulated and high-risk environments, it is no longer enough for an AI model to be correct — teams must also explain why a specific decision was made. However, many AI-based applications still function as black boxes. This lack of transparency complicates debugging, compliance audits, root-cause analysis, and internal approval processes.

Synthetic data does not fully reflect reality

Synthetic datasets are widely used to fill gaps in training and testing, especially in rare edge cases. However, models that perform well on generated data often behave differently when exposed to messy, incomplete, or adversarial real-world inputs. This gap between synthetic and production data remains one of the most persistent weaknesses in testing AI systems.

Failures outside the model itself

In practice, a large share of AI failures do not originate inside the AI model. They occur in data ingestion pipelines, feature engineering layers, third-party data sources, integrations, and downstream systems that consume predictions. This makes AI application testing a true system-level task rather than a model-only exercise.

Fragmented AI testing toolchains

Most teams rely on a collection of disconnected tools for data validation, model evaluation, monitoring, and test automation. The lack of unified testing platforms increases complexity, slows down feedback loops, and makes it harder to trace issues across the AI testing lifecycle.

Regulatory and audit pressure

As regulators increase their focus on automated decision-making, testing AI applications must now support auditability, traceability, reproducibility, and accountability. Teams must not only validate outcomes but also preserve clear documentation of data sources, model versions, decision logic, and validation results. This significantly raises the operational burden of AI testing in production environments.

Is Your AI App Ready for Real-World Use?

We’ll help you know for sure.

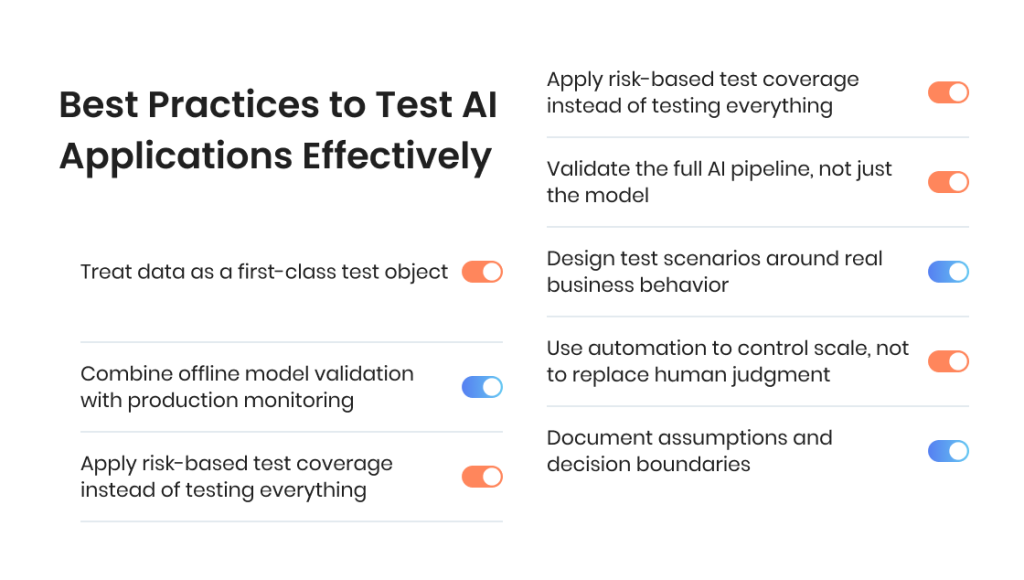

Best Practices to Test AI Applications Effectively

Successful testing of AI applications is not achieved through isolated test activities or one-time model validation. It requires a structured, repeatable, and business-aligned testing approach that spans data, models, pipelines, and production behavior. Based on real-world AI application testing across various industries, the following best practices consistently separate stable AI systems from high-risk deployments.

1. Treat data as a first-class test object

In AI application testing, data quality has the same impact on system behavior as code quality in traditional software. Best practice is to formally include data validation into the entire testing process: checking training, validation, and production data for completeness, bias, outliers, duplication, and distribution shifts. Teams that only test the AI model without thoroughly validating test data often struggle with unstable predictions and unexplained performance drops after release.

2. Combine offline model validation with production monitoring

Offline validation alone is not sufficient to guarantee long-term AI system reliability. AI models may demonstrate strong accuracy during training and pre-release testing but degrade under real-world conditions. Effective teams combine pre-deployment AI model testing with continuous post-deployment monitoring of prediction quality, stability, and performance of AI under live traffic. This ensures that silent failures caused by data drift or changing user behavior are detected early.

3. Apply risk-based test coverage instead of testing everything

Not every AI decision carries the same level of business impact. A core best practice is to prioritize test coverage based on business risk rather than theoretical completeness. Fraud detection, automated approvals, pricing engines, and safety-critical systems demand deeper and more frequent testing than low-impact recommendation features. This risk-based testing approach keeps the entire testing process focused on what truly matters to the business.

4. Validate the full AI pipeline, not just the model

In production, AI rarely operates as a standalone component. Data ingestion, feature engineering, APIs, orchestration layers, and downstream systems all affect the final outcome. Testing AI systems effectively means testing the entire pipeline: from raw input data through model inference to how predictions are consumed by other applications. This end-to-end validation significantly reduces failures that originate outside the AI model itself.

5. Design test scenarios around real business behavior

Generic test cases rarely expose meaningful AI risks. High-quality AI application testing relies on realistic test scenarios that mirror actual user behavior, transaction flows, edge conditions, and abnormal patterns. This includes validating how AI behaves under partial data, extreme values, sudden spikes in activity, and unusual usage combinations that rarely appear in clean historical datasets.

6. Use automation to control scale, not to replace human judgment

Test automation and software test automation play an essential role in AI testing, especially when models are retrained frequently or pipelines change often. Automation enables continuous regression testing across large datasets and repeated validation cycles. However, best practice here is to use automation to control scale while preserving human validation for high-risk decisions, fairness checks, and explainability review.

7. Document assumptions and decision boundaries

Many AI failures originate not from incorrect code but from hidden assumptions about data, users, or operating conditions. Efficient testing teams explicitly document model assumptions, accepted error margins, confidence thresholds, and decision boundaries. This allows stakeholders to clearly understand under which conditions the AI-based application is expected to perform reliably and when human review is required.

Our Experience With Testing AI Systems

Over the past year, we tested several different AI assistants and AI-powered solutions developed for the same enterprise client with a large ecosystem of development and testing tools. While each assistant served a different function, their shared architecture and integration approach allowed us to observe repeatable behavior patterns, systemic risks, and real operational limits that do not appear in isolated pilot projects. Here are our most valuable takeaways from that experience.

1. Context memory breaks faster than expected

Across multiple assistants, short-term session memory worked acceptably, but long-term context was unstable. Users repeatedly had to re-explain goals and constraints after breaks or across different days. This gap between expected “assistant continuity” and actual behavior became one of the most consistent UX and productivity issues we observed.

2. Proactivity is far weaker than users assume

Most assistants responded to direct questions but showed little understanding of user goals beyond the immediate prompt. They rarely guided users forward or adapted responses based on broader task context. This limited their practical usefulness in complex professional workflows and became visible only through long-session, scenario-based testing.

3. Edge cases expose shallow reasoning

When users asked questions that required inference beyond platform documentation, assistants frequently returned irrelevant answers, repeated prompts, or fell back to generic responses. In practice, this revealed that some systems still behave closer to structured chatbots than true analytical agents, despite advanced positioning.

4. Performance weakens under sustained professional use

During heavy testing sessions with dozens of consecutive queries, we observed a growth in delays, duplicated outputs, and stale responses. These issues did not appear in light usage scenarios but became critical under intensive professional workloads. This confirmed the importance of long-session testing and sustained load validation for AI assistants.

5. Model isolation creates unavoidable vendor risk

In most cases, neither documentation nor support teams could clearly confirm which models powered the assistants, what request limits applied, or where performance bottlenecks originated. This lack of transparency forced a black-box testing approach and introduced strong dependency on external model providers and API stability.

6. Integration failures outweigh model defects

Some of the most disruptive failures originated not inside the AI logic but in API connectivity, server-side processing, third-party outages, and infrastructure instability. When failures occurred outside the client’s direct control, recovery depended entirely on the external provider. This reinforced the need for monitoring, provider status visibility, and user-facing failure communication.

7. Localization turned out to be a behavioral issue

Localization challenges went far beyond translation quality. Cultural expectations, tone interpretation, and mental models of how an assistant “should behave” strongly influenced perceived quality and trust. What felt helpful in one region often felt confusing or misleading in another, making localization a core part of behavioral validation.

How this experience reshaped our AI testing approach

This multi-assistant testing effort fundamentally reshaped how we approach AI validation. We now place far greater emphasis on extended sessions, performance degradation over time, memory stability, and external dependency risks. We also define capacity limits, failure behavior, and recovery expectations with clients far earlier in the delivery process.

Another valuable lesson we learned is that the most important outcome was not a list of defects, but a shift in how AI is treated inside products. For this client, AI has moved from an experimental feature to a production-critical system that requires the same engineering discipline, monitoring intensity, and risk control as any other core software component.

60% fewer hallucinations, 82% reply accuracy achieved: How we tested an AI assistant for a CI/CD platform

Final Thoughts

As AI continues to evolve from experimental add-ons into increasingly complex, critical systems, the role of testing is changing just as fast. In 2026, testing AI applications is no longer about verifying isolated features — it is about controlling uncertainty, managing long-term risk, and protecting the reliability of automated decision-making at scale. Teams that treat AI testing as a one-time validation step inevitably face silent degradation, trust issues, and costly late-stage failures.

Organizations that succeed with AI are those that approach quality as a continuous discipline spanning data, models, integrations, and real-world behavior. With the right strategy, testing becomes not a blocker for innovation, but a stabilizing force that allows AI-based applications to grow safely, predictably, and with full accountability to the business.

FAQ

What is the difference between testing AI applications and traditional software testing?

What is the difference between testing AI applications and traditional software testing?

Traditional software testing validates fixed logic with predictable outcomes. Testing AI applications focuses on probabilistic behavior, data quality, and evolving models. Instead of only validating features, teams must continuously assess AI model accuracy, decision stability, and how the AI system performs as data and usage patterns change.

How to test AI and ML applications to ensure correct results?

How to test AI and ML applications to ensure correct results?

To test AI and ML applications, teams validate behavior across acceptable ranges instead of exact outputs. This includes statistical accuracy checks, confidence threshold validation, trend analysis, and large-scale scenario testing using representative test data to ensure consistent and reliable AI decisions.

What types of testing are most important for AI-powered applications?

What types of testing are most important for AI-powered applications?

The most critical types of AI testing include data validation, AI model testing, integration testing, performance testing, security testing, and reliability testing. Together, they ensure that AI-powered applications remain accurate, stable, secure, and operationally reliable under real production conditions.

Can automation fully replace manual testing when you test AI apps?

Can automation fully replace manual testing when you test AI apps?

Test automation plays a vital role in software test automation for AI pipelines, regression cycles, and large-scale test scenarios. However, human validation remains essential for fairness checks, explainability reviews, high-risk decisions, and complex edge cases where business judgment is required.

How to test AI-based applications that rely heavily on real-time data?

How to test AI-based applications that rely heavily on real-time data?

When teams test AI applications with real-time data, they focus on integration testing, latency validation, anomaly detection, and continuous performance monitoring. Shadow testing and controlled releases are often used to evaluate how the AI system performs under live traffic without exposing users to unnecessary risk.

How to test agentic AI applications that make autonomous decisions?

How to test agentic AI applications that make autonomous decisions?

To test agentic AI applications, teams must validate not only individual model outputs but also goal-setting logic, action sequencing, feedback loops, and failure recovery behavior. Scenario-based testing, controlled simulations, and post-deployment monitoring are essential because these systems adapt their behavior in real time.

Jump to section

Hand over your project to the pros.

Let’s talk about how we can give your project the push it needs to succeed!