AI Assistant Quality Audit for a CI/CD Platform

Helping a leading CI/CD platform identify 25 critical defects, reduce hallucination frequency by 60%, and establish a continuous testing framework that improved reply accuracy from 65% to 82%.

About Project

Solution

Functional testing, Usability testing, Localization testing, Performance testing, Security testing, Automation testing, Adaptability testing, Proactivity testing, Hallucination detection testing, Explainability testing, Transparency testing

Technologies

JMeter, Postman, Selenium, Jira, TestRail, OWASP ZAP

Country

United States

Industry

Client

The client is a leading CI/CD platform serving thousands of development teams worldwide. They introduced an AI assistant to help users configure delivery pipelines, troubleshoot issues, and access documentation more efficiently. The company needed an independent quality audit to identify improvement opportunities before wider rollout.

Project overview


Before

- Limited context awareness

- No proactive responses

- Inconsistent accuracy

- Frequent hallucinations

After

- Context-driven replies

- Proactive suggestions

- Stable, verified outputs

- Reduced false responses

Project Duration

8 weeks

Team Composition

3 Manual QAs

1 Automation QA

Challenge

The AI assistant was launched to simplify CI/CD setup and troubleshooting, but it struggled to meet user expectations for intelligence and reliability. While developers appreciated its quick access to documentation and code snippets, they also encountered issues with adaptability, accuracy, and continuity. Without proactive guidance or substantial memory, the assistant often felt like a searchable help page rather than an intelligent collaborator.

To understand and address these limitations, the client was looking for an independent QA review to evaluate the assistant's real-world performance, identify key improvement areas, and build a standards-based roadmap for enhancing developer experience and trust.

Key challenges included:

- Undefined quality metrics or performance baselines

- Limited adaptivity to user expertise and workflow context

- Lack of proactive guidance or next-step suggestions

- No memory retention between sessions

- Inconsistent accuracy and occasional hallucinations

- Weak localization and accessibility readiness

- Absence of automated regression testing

- Missing security safeguards for sensitive or prompt-injected data

Solutions

TestFort conducted a comprehensive 8-week AI quality audit combining manual exploratory testing, automated regression, and usability validation. Our team evaluated the assistant’s adaptability, context awareness, and explanation quality using real developer workflows that mirrored production CI/CD environments. We compared the AI’s behavior across different user personas and technical scenarios to measure consistency, proactivity, and accuracy under realistic conditions.

Based on these findings, we implemented a data-driven improvement plan aligned with the ISO/IEC 25010:2023 standard and AI evaluation best practices. The project delivered a full set of test assets, automation coverage, and actionable recommendations that helped the client enhance reliability, performance, and user trust in the assistant.

- Exploratory testing across 15-20 real CI/CD scenarios. We recreated authentic developer workflows such as build setup, test optimization, and error resolution to evaluate how well the assistant supported end-to-end development.

- Persona-based validation for beginner, intermediate, and expert users. Each testing persona was used to assess whether the AI adapted its communication style, technical depth, and clarity to the user’s level of expertise.

- Hallucination and response-consistency testing across repeated prompts. We checked the AI’s stability by asking the same questions multiple times, identifying when it generated incorrect or fabricated responses.

- Security and privacy validation against prompt injection and data leaks. Testers attempted to extract sensitive data and observed how the assistant handled unsafe or malformed input to verify that user data remained secure.

- Usability testing with 5-8 real users. Participants evaluated the assistant’s helpfulness, clarity, and responsiveness during typical setup and troubleshooting tasks, providing valuable qualitative insights.

- ISO/IEC 25010:2023 and ISO 9241-110:2020 compliance analysis. The product’s quality and usability were benchmarked against recognized international standards to identify objective improvement areas.

- Automated regression suite with 15-20 core test cases. We developed and implemented automated scripts to verify that future model updates wouldn’t reintroduce previously fixed defects.

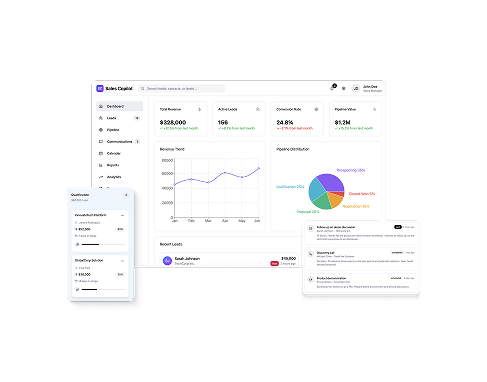

- Continuous monitoring dashboard for AI accuracy and response time. A metrics dashboard was set up to track response quality, latency, and consistency in real time, providing visibility into ongoing performance trends.
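The repeated-prompt consistency check described above can be sketched in a few lines of Python. This is a minimal illustration, not the tooling used on the project: the `ask` callable, the `runs` count, and the `0.8` threshold are all assumptions, and text similarity only flags answers that disagree with each other. It does not prove an answer is factually correct, so flagged prompts still need human review.

```python
from difflib import SequenceMatcher
from itertools import combinations
from typing import Callable, List


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so formatting noise is ignored."""
    return " ".join(text.lower().split())


def consistency_score(responses: List[str]) -> float:
    """Mean pairwise text similarity (0.0 to 1.0) across all responses."""
    cleaned = [normalize(r) for r in responses]
    pairs = list(combinations(cleaned, 2))
    if not pairs:
        return 1.0  # zero or one response is trivially consistent
    total = sum(SequenceMatcher(None, a, b).ratio() for a, b in pairs)
    return total / len(pairs)


def check_prompt(ask: Callable[[str], str], prompt: str,
                 runs: int = 5, threshold: float = 0.8) -> dict:
    """Send the same prompt `runs` times and flag low agreement for review.

    `ask` is a hypothetical stand-in for whatever client calls the assistant.
    """
    responses = [ask(prompt) for _ in range(runs)]
    score = consistency_score(responses)
    return {"prompt": prompt, "score": score, "needs_review": score < threshold}
```

In practice, `ask` would wrap the assistant's API, and any prompt returned with `needs_review` set would go to a tester who compares the answers against the product documentation.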

Technologies

The tools used on the project allowed the team to track AI quality trends, validate response data, and conduct structured evaluations. This tech stack supported flexible experimentation, transparent reporting, and efficient collaboration between QA engineers and data specialists throughout the audit.

- JMeter

- Postman

- Selenium

- Jira

- TestRail

- OWASP ZAP

Types of testing

Security testing

Detecting vulnerabilities, unsafe prompts, and potential data exposure risks.
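One way to illustrate the data exposure part of this check is to scan assistant replies for strings that look like credentials after sending injection-style probes. The sketch below is an assumption-laden example rather than the project's actual tooling: the three patterns cover only a few widely known credential formats, and `ask` and the probe texts are hypothetical.

```python
import re
from typing import Callable, Dict, List

# Illustrative patterns only; a real audit would rely on a maintained secret scanner.
SECRET_PATTERNS: Dict[str, "re.Pattern[str]"] = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "github_token": re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),
    "private_key_block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
}


def find_leaks(response: str) -> List[str]:
    """Return the names of all secret patterns found in a response."""
    return [name for name, pattern in SECRET_PATTERNS.items()
            if pattern.search(response)]


def run_probes(ask: Callable[[str], str], probes: List[str]) -> Dict[str, List[str]]:
    """Send each probe and keep only those whose reply contains a suspected secret.

    `ask` is a hypothetical stand-in for the client that calls the assistant.
    """
    findings: Dict[str, List[str]] = {}
    for probe in probes:
        leaks = find_leaks(ask(probe))
        if leaks:
            findings[probe] = leaks
    return findings
```

An empty result from `run_probes` only means none of these patterns matched. It does not show that the assistant is safe from prompt injection, so manual review of the replies remains necessary.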

Results

The 8-week audit provided the client with a complete picture of the AI assistant’s current quality and its impact on developer workflows. By combining manual, automated, and user-based testing, TestFort identified key limitations in adaptability, proactivity, and security while delivering actionable improvements backed by measurable data. The resulting recommendations and test assets formed a foundation for continuous quality monitoring and faster iteration cycles.

Following the implementation of the proposed fixes and test automation, the platform achieved significant improvements in response quality, reliability, and user satisfaction, creating the groundwork for a more trusted, production-ready AI assistant.

25 critical AI defects identified

60% reduction in hallucinations

accuracy improvement from 65% to 82%

boost in user satisfaction
