AI Testing Services

AI Product QA

With specialized AI model testing, data validation, and bias detection, we help you ship reliable AI applications that perform consistently in real-world scenarios.

Why Your AI Product Needs Specialized QA

Up to 40% of dev time gets wasted debugging unpredictable AI behavior.

Just 1 wrong output can damage user trust or trigger compliance risks.

80% of AI project failures happen due to poor data quality and lack of testing oversight.

Thousands of inputs, millions of paths — you need AI testing automation for that.

AI Product Testing ≠ Software Testing

AI models behave unpredictably, learn from user data, and fail in ways traditional software never does. You need testing designed for these unique challenges.

Traditional Software

- Predictable outputs

- Fixed logic

- Clear pass/fail criteria

AI Products

- Variable outputs

- Learning logic

- Context-dependent results

Our AI Testing Services

Data testing

Validate input quality, preprocessing, and how model outputs change based on different data types and volumes.

Model validation

Test ML and GenAI models for accuracy, consistency, and robustness — including edge cases and retraining behavior.

Functional testing

Check that AI-powered features work reliably across platforms, ensuring stable logic, APIs, and user flows.

Output review

Assess generated results for safety, bias, and usability — flagging hallucinations or unacceptable outcomes.

Security checks

Test for adversarial attacks, data leakage risks, and model misuse — protecting your AI from intentional harm.

Explainability testing

Verify model transparency, traceability, and behavior alignment — helping teams meet compliance and ethical standards.

Expert AI Testing: Your Competitive Advantage

AI-Specific QA Frameworks

Traditional testing misses AI-specific risks like model drift and data bias.

Our specialized frameworks validate data quality, model behavior, and system stability, ensuring your product is reliable and ready for the real world.

Predictable Performance

Unpredictable AI behavior wastes valuable dev time.

We help you take control by ensuring your model performs consistently and predictably under any condition, so you can build with confidence and ship faster.

Unbiased Outcomes

Your product must work fairly for all users.

We perform rigorous bias analysis to guarantee your models deliver ethical and equitable results, protecting your brand’s reputation and building user trust.

Minimized Hallucinations

False or fabricated outputs can quickly erode user confidence.

We use targeted methods to identify and minimize “hallucinations,” ensuring the accuracy and reliability of your AI’s generated content.

Superior User Experience

Users expect a flawless product with predictable UX flows.

We test how your AI features integrate with the user interface and data, guaranteeing a smooth and intuitive experience that keeps users engaged and satisfied.

Cost-Efficient QA at Scale

Scaling an AI product without proper testing can be expensive.

Our services help you manage resources and costs efficiently, ensuring high quality that grows with your business, not against it.

“When building an AI product, you’re constantly worried about it saying something wrong or offensive. We knew we couldn’t just hope it worked. TestFort’s team was great at pointing out things we missed, like those subtle biases that could have become a PR issue. They’ve built a testing pipeline based on modern frameworks specifically for our AI app and gave us the confidence to launch.”

Chief Product Officer, Project Planning Tool

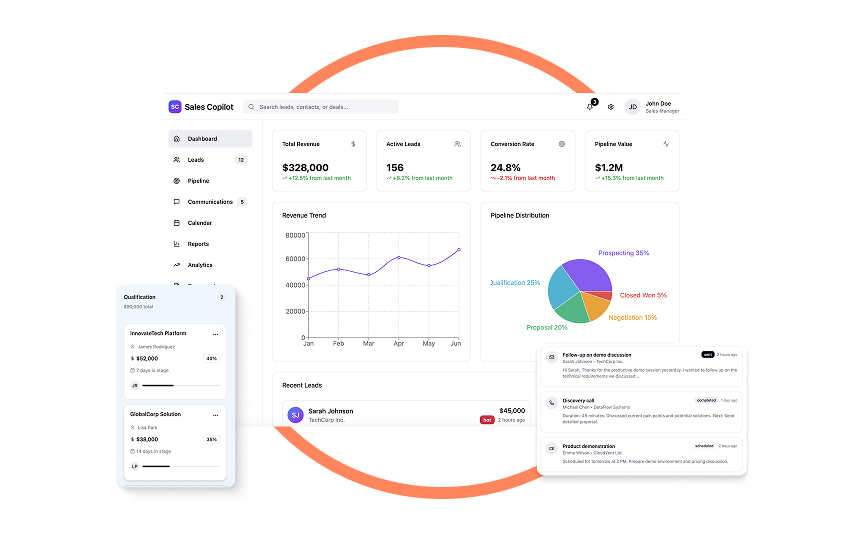

Our Latest AI Product Testing Cases

Testing AI Models and AI Applications by Type

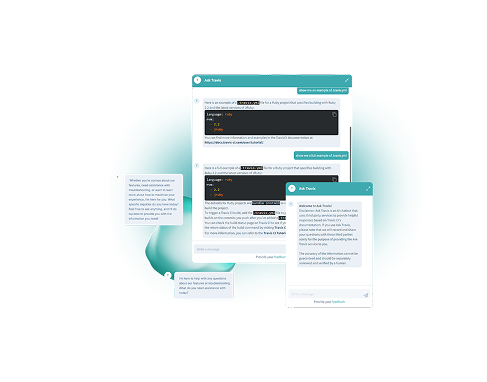

GenAI products

Chatbots, copilots, content generation tools, and LLM-based assistants — tested for prompt reliability, hallucinations, and safe user interaction.

ML-powered systems

Recommendation engines, fraud detection, demand forecasting, risk scoring tools, and other real-time machine learning systems.

Agentic AI

Autonomous agents that plan, act, and learn with limited human input — validated for outcome reliability, safety boundaries, and control flow.

NLP & voice AI

Language models, sentiment analysis tools, voice recognition systems, and multilingual AI — tested across intent accuracy, tone, and edge-case inputs.

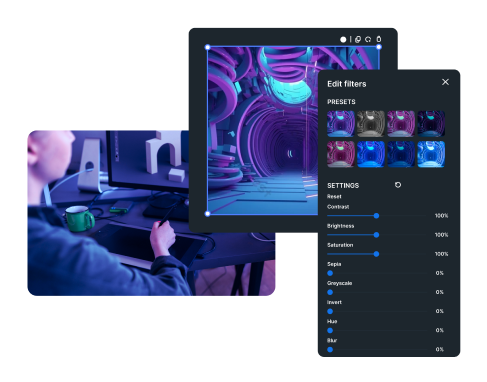

CV & vision models

Image recognition, object detection, OCR, and real-time video analysis — tested for accuracy, performance, and adaptability across device types.

Enterprise AI

AI systems used in finance, healthtech, logistics, and other complex environments — tested for reliability, compliance, and integration with critical workflows.

Get Your Role-Specific AI Product Testing Checklist

Don’t guess what to test. Our comprehensive checklist breaks down the most critical AI QA tasks for your role — from validating models and data to ensuring unbiased outcomes.

Our AI ML Testing Approach

Standard software testing misses AI-specific risks like model drift, bias, and adversarial attacks. Our AI testing methodology validates model accuracy, data quality, and system reliability using approaches designed for machine learning systems.

Model-aware testing strategy

We tailor our artificial intelligence testing approach to your specific AI architecture — from LLMs and natural language processing models to computer vision, time-series prediction, or rule-based hybrids.

- Performance testing optimized for your AI system’s requirements

- Custom testing frameworks for different AI model types

- Specialized validation for generative AI applications and ML models

- Testing methodologies adapted to your AI solution’s complexity

Data-driven validation

We simulate diverse input conditions using synthetic data, noisy datasets, edge cases, and adversarial scenarios — validating output accuracy, detecting bias, and ensuring stability across different data quality conditions.

- Data quality assessment throughout the testing process

- Comprehensive testing with clean and corrupted data inputs

- Edge case validation using amounts of data your system will encounter

- Adversarial testing to identify potential security vulnerabilities

Security & Robustness testing

AI systems face unique security challenges. We test for prompt injection, data poisoning, model extraction attacks, and other AI-specific vulnerabilities that could compromise your system.

- Testing AI applications for data privacy and secure processing

- AI-driven security testing for prompt injection and adversarial attacks

- Model robustness validation against malicious inputs

- API testing for AI endpoints and data access controls

Explainability & Compliance checks

Where required, we test for traceability and explainability. We validate model predictions, alignment with business expectations, and compliance with ethical AI artificial intelligence software testing and market guidelines, including regulatory requirements.

- Bias detection and fairness validation across user groups

- Model behavior analysis and prediction validation

- Testing helps ensure AI decisions are transparent and justifiable

- Compliance testing for AI regulations and industry standards

Feedback loop & Integration testing

We test how your intelligent systems behave when live inputs change the model over time — validating retraining processes, protecting against model drift, and ensuring seamless integration with existing software.

- Continuous monitoring setup for production AI performance

- Testing AI systems within broader DevOps pipelines

- Model drift detection and retraining validation

- Integration testing for AI-based components and APIs

User experience & Output validation

AI-powered doesn’t mean user-proof. We test how your AI behaves in actual user flows across different channels — ensuring responses are accurate, usable, safe, and consistently helpful.

- Cross-platform testing for AI applications and services

- Manual testing of AI interactions and user scenarios

- Output quality validation for generative and predictive AI

- User interface testing for AI-driven features

The Impact of Risk-Focused AI Product Testing

For the Head of QA

Gain full control and confidence over your AI pipeline by managing key risks like model bias and drift for every release.

For the CEO

Mitigate brand and business risks, from compliance failures to security issues, so you can scale and grow with confidence.

For the Product Manager

Ensure a superior user experience by eliminating hallucinations and guaranteeing your AI product performs exactly as designed.

For the Dev Team

Get clear, actionable bug reports that help you quickly fix unpredictable AI behavior and get back to building.

Need to discuss a specific AI project testing or your current AI QA framework? We’re here to help.

Our Awards and Achievements

Why TestFort for AI Testing Services

Adopting new tech since 2001

Over two decades of QA experience means we quickly understand new technologies like AI and build effective testing strategies.

Smart

coverage

From model validation to bias testing — we cover all aspects of AI quality assurance using proven testing methodologies.

Real-world AI

testing

We test with realistic data and scenarios, not just clean lab conditions — ensuring your AI works for actual users.

Flexible QA

models

Custom testing strategies for your AI stack — whether you need model validation, full-cycle QA, or specialized generative AI testing.