Ever since the onset of COVID-19, the over-the-top (OTT) media industry has been booming, with dozens of new software products appearing on the market. However, some OTT apps have been around much longer and already have millions of loyal users. We’ve had the pleasure of testing one of those apps for several years now. Today we are sharing our experience and what we’ve learned over the course of our collaboration.

Our history with the project

We joined the project nine years ago, when it was already a well-known product in the media industry with almost fifteen years on the market. We started out with two people from our company working on the project and have gradually grown to eighteen team members today. Our scope of responsibilities grew along with the team size, but even when there were just two QA engineers, we put a lot of effort into our work.

Why it’s so important for us to do a good job

The product we are testing is a force to be reckoned with in the media and streaming industry. The iOS version of the app is frequently featured by Apple in the media-related sections of its presentations. Moreover, the application offers over 100,000 radio stations covering every topic and personal preference. That alone is a big incentive for us to do our best day in and day out.

It goes without saying that we approach every project with maximum responsibility, regardless of the industry, project size, or other factors. However, testing a product that has over 75 million monthly users certainly brings an additional level of responsibility. And with our carefully designed approach and strong QA expertise, we are more confident in the quality of the application than ever.

What does an OTT app need to be great?

The success of an OTT app can be measured by several vital metrics. However, in our OTT testing process, we use our own set of criteria for determining whether the product is ready for release. The key thing we are looking to ensure is a good user experience, which, in turn, consists of numerous smaller items, from a clear and appealing user interface to the way the application acts when there is an incoming call or a text message.

However, there is more to the success of an OTT software product than an attractive UI, and it’s not always the things you can spot with the naked eye. While testing the application, we always look at how it behaves under changing internet bandwidth, how user data is stored and managed, and how streaming works on less common platforms, such as car stereo systems. Only when the quality criteria are met can the product expect a decent market position and positive audience reception.

Our approach to testing OTT software products

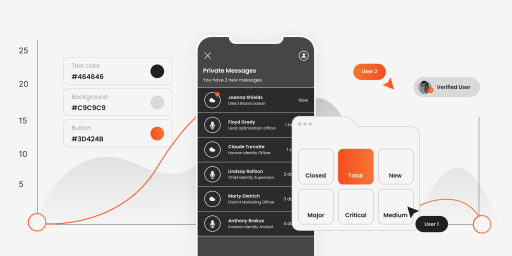

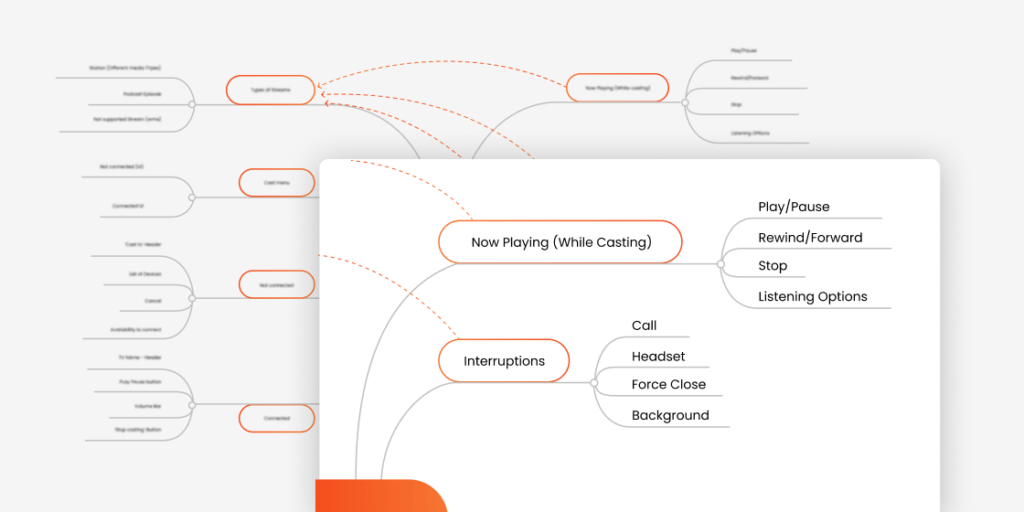

From the very beginning, we set out to develop the most effective approach that perfectly meets the needs of a popular media app that continues to grow. The result is a strategy that we have used for years and continue using to this day. The key tool we use under this strategy is creating mind maps. Visualizing the scope of work helps us better understand the challenge and make sure that the key quality criteria of the application are included in the strategy.

This strategy allows us to break the whole application into smaller fragments and test each one with maximum precision and efficiency. These fragments are interconnected and include:

- User action

- Parameter

- Value

We have found that every component of an application, whether it’s an OTT product or another software solution, can be broken down into the same pattern of Action → Parameter → Value. For example, when we need to test the streaming feature, streaming an audio file becomes the Action. The Parameter in this situation can be the audio format. The Value can then be MP3, FLAC, WMA, and so on. Other examples of the Action → Parameter → Value logic include:

- Action: Share

- Parameter: Social Network

- Value: Facebook, Twitter, etc.

————————————————

- Action: SharePlay

- Parameter: Type of Content

- Value: Radio Station, Podcast, etc.

————————————————

- Action: Change Stream Quality

- Parameter: Stream Quality Options

- Value: High Bitrate, Medium Bitrate, etc.

It’s worth noting that even the strongest testing strategy does not guarantee that 100% of bugs will be detected. To us, its biggest value is the ability to perform deep analysis and ensure comprehensive test coverage. And when a bug is discovered after all, we can quickly find other product areas that may be affected.

Each parameter and value can make a noticeable difference in the correct operation of the app and user experience, which is why testing even the most minor features is essential.
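To illustrate the Action → Parameter → Value breakdown, here is a minimal Java sketch that expands each branch of such a mind map into individual test-case titles. The class and method names (`TestDimension`, `ApvCaseGenerator`, `expand`) are hypothetical and exist only for this example. The sample values are taken from the lists above.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical model of one mind-map branch: an action, one parameter, and its values.
final class TestDimension {
    final String action;
    final String parameter;
    final List<String> values;

    TestDimension(String action, String parameter, String... values) {
        this.action = action;
        this.parameter = parameter;
        this.values = Arrays.asList(values);
    }
}

final class ApvCaseGenerator {
    // Produces one readable test-case title per value of each dimension.
    static List<String> expand(List<TestDimension> dimensions) {
        List<String> cases = new ArrayList<>();
        for (TestDimension dimension : dimensions) {
            for (String value : dimension.values) {
                cases.add(dimension.action + " | " + dimension.parameter + " = " + value);
            }
        }
        return cases;
    }

    public static void main(String[] args) {
        List<TestDimension> dimensions = Arrays.asList(
            new TestDimension("Stream audio", "Audio format", "MP3", "FLAC", "WMA"),
            new TestDimension("Share", "Social network", "Facebook", "Twitter"),
            new TestDimension("Change stream quality", "Stream quality option",
                "High bitrate", "Medium bitrate"));
        for (String testCase : expand(dimensions)) {
            System.out.println(testCase);
        }
    }
}
```

Running `main` prints seven titles, starting with `Stream audio | Audio format = MP3`. Keeping the three levels as data makes it easy to see which values a given action has not been tested against yet.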


Process and methodology of testing OTT apps at TestFort

As we’ve mentioned earlier, the product has grown a lot over the years, and so has our scope of responsibilities. To keep everything under control and check every single feature, environment, and test case, we have divided our entire team into four main subteams. They include:

- iOS team

- Android team

- Web team

- Platform team

In addition, we have a fifth subteam that works on the same schedule as the development team, which is located in the US and is therefore separated from our regular QA team members by a significant time difference. That way, the fifth team is perfectly in sync with the developers and also fully aware of the work on our side, which leads to better communication, more efficient testing, and, eventually, higher quality of the product.

Over time, we also found that a pull-request-based testing strategy works best for our purposes. Each change to the application gets a separate build, so testing the product at the pull request stage prevents bugs from making it into the main build. This increases the quality of the app and lets us deal with issues before fixing them becomes too costly. Once we have comprehensively tested the code changes at the pull request stage, we approve the pull request to be merged, included in the release candidate build, and eventually shipped in the production build, where users can interact with it.

Our preferred methodology

After almost a decade of working with the same OTT product, we have concluded that the Agile methodology and Scrum framework are the best options for our product. This is a methodology we are all familiar with and an approach that was actively used on the client’s side before we even came on board.

Two-week sprints and regular Scrum events help us structure our work with maximum efficiency. The two most important Scrum events for us are:

- Daily standups. During these calls, which always happen with everyone’s camera turned on, we not only synchronize our efforts and plan the work for the day, but also maintain close ties within the team despite working remotely for the past 2+ years.

- Sprint retrospective. This event takes place at the end of each sprint and allows us to evaluate our work over the past two weeks, for example, to see what went well and what can be improved in the next sprint.

Tools and specific actions we use for OTT testing

In many aspects, testing an OTT product is similar to testing other types of software. It requires the same precision, attention to detail, and familiarity with QA best practices. Still, there are many ways in which OTT testing requires a unique expertise, and that includes knowledge of specific tools and actions used in testing media products. These are some of our most used testing actions:

- Interruptions — an action that allows us to see how the application reacts to possible interruptions in the stream, such as a smartphone notification. Here we also look at the less obvious stream parameters, such as plugging in wired headphones or connecting a Bluetooth headset.

- Throttling — an approach used for checking network connections and how issues with connectivity can impact the performance of the application. We use Charles Proxy for this purpose.

- Customer engagement simulation — the process of seeing the product through the eyes of a regular customer, including user communication via push messages or in-app messaging, with the help of Braze.
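For the throttling checks described above, it helps to know in advance which combinations of bandwidth and stream bitrate should play without stalling. The sketch below is a simplified illustration, not a description of how the app or Charles Proxy works. The class name, method names, and sample numbers are hypothetical, and the calculation ignores protocol overhead and buffering strategy.

```java
// Hypothetical helper for deciding the expected result of a throttling test.
final class ThrottlingExpectation {

    // Seconds of audio downloaded per second of wall-clock time.
    static double fillRate(int bandwidthKbps, int streamBitrateKbps) {
        if (bandwidthKbps < 0 || streamBitrateKbps <= 0) {
            throw new IllegalArgumentException("bandwidth must be >= 0 and bitrate must be > 0");
        }
        return (double) bandwidthKbps / streamBitrateKbps;
    }

    // A stream can play continuously only if it downloads at least as fast as it plays.
    static boolean sustainsPlayback(int bandwidthKbps, int streamBitrateKbps) {
        return fillRate(bandwidthKbps, streamBitrateKbps) >= 1.0;
    }

    public static void main(String[] args) {
        int[] throttledBandwidthsKbps = {32, 96, 512}; // assumed throttle profiles
        int[] streamBitratesKbps = {64, 128};          // assumed quality options
        for (int bandwidth : throttledBandwidthsKbps) {
            for (int bitrate : streamBitratesKbps) {
                System.out.println(bandwidth + " kbps link, " + bitrate + " kbps stream: "
                    + (sustainsPlayback(bandwidth, bitrate) ? "should play" : "expect buffering"));
            }
        }
    }
}
```

A table like the one `main` prints gives the tester a baseline to compare against what the app actually does on a throttled connection.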

Types of testing we use

Our approach to testing OTT products is based on exhaustive attention to even the most minor features of the app, but it’s definitely possible to break down our process into the types of testing that everyone in the software industry is familiar with. These are the types of testing we use most often when testing OTT applications:

- UI/UX testing — to check the way users can interact with the product and whether they are happy with the interface, navigation, and other features.

- Exploratory testing — a type of testing where the team has free rein over which aspects of the product need to be tested and how it should be done.

- Functional testing — to make sure that the product is doing everything it’s supposed to do and that the app works flawlessly across all platforms.

- User acceptance testing — to test the system as a whole and make sure that the latest version of the product is ready to be released to the public.

- Sanity testing — to verify that the recent code changes did not negatively affect the specific functionality of the application.

- Smoke testing — to evaluate the state of the whole application after recent code changes to ensure that critical functionality is working as intended.

- Compatibility testing — to make sure that the application works properly regardless of the device, operating system, or configuration.

- Integration testing — to check how different software modules are integrated into the system and how the newly updated system operates.

- Localization testing — to ensure that the application complies with regional requirements and that its users in different parts of the world can get the same great experience.

- Accessibility testing — to determine whether the application can be successfully used by people with disabilities, such as hearing or visual impairments.

Testing on physical devices vs. testing on virtual machines: What’s the difference and which one is the better option?

Many software testing companies and teams are no strangers to device simulators and emulators. They are presented as an affordable, quick way to increase platform coverage. Indeed, virtual machines can be a great option when you need to test a software application on different devices and platforms that you may not necessarily have physical access to.

However, we will always choose testing on physical devices over even the most high-quality emulators. For this project, we use dozens of smartphones, tablets, and laptops with a wide range of characteristics, operating systems, screen resolutions, and other parameters. We test on flagship devices and older gadgets. Testing on physical devices allows us to:

- Simulate real-user behavior and see the application through the eyes of a user

- Use real-world scenarios for all-encompassing testing

- Notice minor UI, performance, and other issues that are not that easy to notice with virtual devices

- Add a new device to our roster in a matter of seconds, without any lengthy preparation and setup process

- Save time on effective and accurate testing, which results in faster build times

Clearly, testing on physical devices has a lot of advantages over doing it on virtual machines. The difference is especially apparent when it comes to testing an OTT product — a lot of its potential success depends on an engaging UI and positive user experience, and that is something you can only test in full with a roster of physical devices.

Automating OTT testing: How and why to do it?

Automated testing has a lot of potential when it comes to OTT products. It saves time and resources on tests that need to be run every day — for example, to execute full regression testing with maximum efficiency. Automating tests allows us to spot critical bugs in the early stages of testing. This includes functional parameters, UI parameters, and a few other essential aspects of QA.

Our team is also proficient in test automation and actively uses tools like Selenium, TeamCity, and Appium to cover more of our testing needs in less time, with Java as our main programming language for automating the tests. Over the years, we have been involved in both web and mobile test automation. For example, 1,250 tests run automatically every day for the web version of the product alone, and it would take a manual QA engineer approximately a month to cover all of them.

Moreover, we use automated testing to process system reports more efficiently. Automated tests help us make sure that a report is created and sent correctly after it’s triggered by a specific user action. In the near future, we are also planning to launch automated localization testing. Because the product is available in 11 different languages, automated localization tests are the most efficient way to verify that the app complies with local language requirements.
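One simple kind of automated localization check is verifying that every language has a translation for every UI string. The Java sketch below shows the idea under stated assumptions: translations are already loaded into maps, and the class name, method name, and sample keys are hypothetical rather than taken from the product.

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

// Hypothetical helper: reports which translation keys each locale is missing.
final class LocalizationCoverage {

    // Returns locale -> keys present in the base language but absent or blank in that locale.
    // Locales with complete translations are left out of the result.
    static Map<String, Set<String>> missingKeys(
            Map<String, String> base,
            Map<String, Map<String, String>> translations) {
        Map<String, Set<String>> report = new TreeMap<>();
        for (Map.Entry<String, Map<String, String>> locale : translations.entrySet()) {
            Set<String> missing = new TreeSet<>();
            for (String key : base.keySet()) {
                String text = locale.getValue().get(key);
                if (text == null || text.trim().isEmpty()) {
                    missing.add(key);
                }
            }
            if (!missing.isEmpty()) {
                report.put(locale.getKey(), missing);
            }
        }
        return report;
    }
}
```

For example, if the base language defines the keys `play` and `share` and the German map contains only `play`, the report contains a single entry mapping `de` to `share`. A check like this covers completeness only. Whether a translation reads naturally still needs a human reviewer.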

However, there is one thing we have discovered in more than nine years of testing the product: a combination of manual and automated testing delivers the strongest results. Automated testing follows the same sequence of steps time after time, whereas with manual testing we can quickly react to possible changes. Manual testing requires us to use our analytical skills and set the right priorities. So it’s not a question of whether manual or automated testing is right for a project. It’s a question of combining both for a winning strategy.

What helps us be good at testing OTT products

Here at TestFort, we firmly believe that in order to be good at something, you need to be passionate about what you’re doing. And in the case of this project, which largely has to do with music, the stars aligned perfectly, as the team working on it is an exceptionally musical one. There are musicians, DJs, and music aficionados who use the app on a daily basis. As active users of the app, and not just detached QA engineers, we are able to evaluate the user experience more effectively and make a real difference in the quality of the product.

Another integral component of our work and the thing that helps us excel in testing the product is how well we have gelled as a team. We know that teamwork is sometimes viewed as a tired buzzword in the tech world, but it’s genuinely the best way to describe our modus operandi.

We started out working from the same office, and even though that has not been the case for over two years — first due to the COVID-19 lockdowns and then due to the war — we continue fostering an open and effective relationship within the team. And it’s not just work-related — it’s also personal.

From daily meetings with cameras for better communication to encouraging each other’s hobbies and interests, we are convinced that the better we communicate as a team, the better we are at our job. And the results of our work over nine years only confirm it. To help with fostering strong relationships within the team, we also have a virtual coffee break room, which is always open to those who want to catch up with their colleagues and discuss anything in the world.

Final thoughts

OTT software products have huge potential, and OTT is one of the fastest-growing segments of the tech industry. And we at TestFort are well-equipped for the inevitable dominance of OTT technology. We know what an OTT application needs to win over the right kind of audience and how to bring the quality of the product from good to great. Entrust testing of your OTT application to us and expect to be absolutely satisfied with the results!