Created by: Igor Kovalenko, QA Team Lead and Mentor, TMMi expert. Sasha Baglai, Content Lead.

Summary: A 2025 guide to implementing the testing maturity model (TMM) framework to assess your current testing processes and systematically improve from reactive chaos to predictable, scalable quality control.

Best for: CTOs, QA managers, development team leads, engineering managers, QA engineers, DevOps engineers, software architects.

Your testing process is either helping you ship faster or holding you back. There’s no middle ground.

Most teams test like they’re putting out fires. Something breaks, they write a test. A release fails, they add more checks. Then they wonder why every deployment feels like a gamble.

Mature testing works differently. You know where things are likely to break before they do. Your releases ship on schedule because quality is built in, not bolted on afterward.

The difference? Systematic processes that work the same way across every team and project.

Here’s what changes when testing becomes mature:

Delivery becomes predictable. You stop padding estimates for “testing time” because you know your processes will catch problems before they become blockers. Release planning shifts from conservative guessing to realistic commitments.

Risk changes type, not just amount. You catch integration issues in week 2 instead of week 8, and you also learn much earlier whether your feature actually solves the user's problem. Your risk becomes strategic instead of operational.

Cost structure changes as you grow. Immature testing gets more expensive with every new feature. Mature testing costs more upfront but stays consistent as your codebase expands. You can finally say yes to “quick changes” without breaking everything else.

The Testing Maturity Model provides a structured approach to get there. Five defined levels, measurable criteria, and practical methods that scale with your organization.

What Is Test Maturity and Why It Matters

Reactive testing, where a test is written only after something breaks and checks are added only after a release fails, creates chaos.

Mature testing means you have repeatable processes that work the same way across teams and projects. Your testing integrates with development from day one, not as an afterthought.

Here’s what mature organizations actually do differently:

- They plan testing during requirements, not after coding;

- Their test processes are documented and consistent;

- They measure what matters: defect prevention, not just detection;

- They improve their testing based on data, not gut feelings.

In a nutshell: Testing Maturity Model (TMM) gives you five clear levels to assess and improve your testing processes. Level 1 teams test reactively when things break. Level 5 teams prevent problems before they happen. Most organizations operate at Levels 1-2 and can reach Level 3 within 12-18 months with systematic effort. The result: predictable releases, fewer production issues, and testing that scales with your development speed instead of slowing it down.

How test maturity impacts delivery, risk, and cost

Delivery confidence changes everything. With mature testing, your team stops being conservative with release timelines. You can commit to aggressive deadlines because you know your testing will catch problems before they become blockers.

This shifts how you plan sprints and roadmaps. Instead of padding estimates for “testing time,” you build with confidence that quality is baked in.

The risk profile shifts, not just shrinks. Mature testing sharply reduces technical risk but surfaces business risks earlier. You catch integration issues in week 2 instead of week 8, and you also learn much earlier whether your feature actually solves the user's problem.

Your risk becomes strategic instead of operational. That is harder to manage, but far more valuable to catch early.

Cost structure inverts. Immature testing costs more as you scale, because every new feature adds to your testing debt. Mature testing costs more upfront but stays roughly flat as your codebase grows.

The real cost impact? You can finally say yes to the “quick changes” that business teams request without breaking everything else.

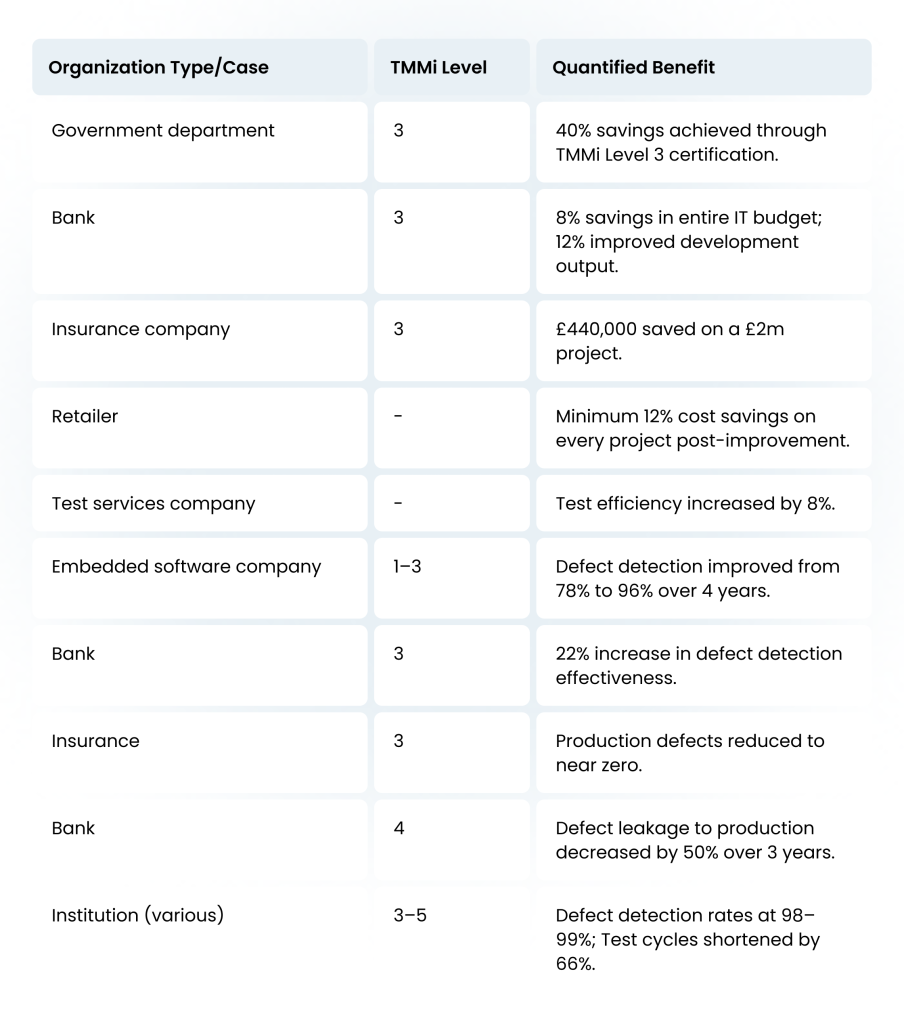

Don’t just take our word for it. Look at the quantified data published by the TMMi Foundation and industry studies. Here are some reported numbers on the impact of implementing and improving testing maturity.

Quantified impact: Survey and case study data

Organizations worldwide have implemented TMMi and measured the results. Here’s what the data shows about real-world impact across different industries and maturity levels.

Sources used: TMMi Foundation, “Benefits Delivered”; “Worldwide user-survey reports.”

TMMi benefits for various industries

Beyond survey percentages, specific organizations have documented measurable improvements from TMMi implementation. These case studies show actual cost savings, efficiency gains, and quality improvements across different industries and maturity levels.

Additional metrics reported

- Test estimation accuracy improved by 60%.

- Test execution lead time reduced from 19 to 5 weeks.

- Defect leakage to production dropped from 10% to 5%.

- Test automation tools consolidated, e.g., from 6 to 4.

- Time to market accelerated by over 7% in some cases.

- Regression test cycle time cut from 4 hours to 30 minutes.

Sources used: TMMi Foundation, “Benefits Delivered”, “Costs and Benefits of the TMMi – Results of the 2nd TMMi World-Wide User Survey”; Science Publishing Group, “Test Maturity in the Financial Domain”.
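The reported before/after figures use different units, so a common scale helps. The short Python sketch below converts each pair into a relative reduction. The input values are copied from the list above, while the `relative_reduction` helper is our own illustration. This is plain arithmetic and is not part of TMMi.

```python
# Before/after values copied from the reported metrics above.
# Regression cycle time is converted to minutes (4 hours = 240 minutes).
REPORTED = {
    "Test execution lead time (weeks)": (19, 5),
    "Defect leakage to production (%)": (10, 5),
    "Test automation tools (count)": (6, 4),
    "Regression test cycle time (minutes)": (240, 30),
}


def relative_reduction(before: float, after: float) -> float:
    """Return the drop from `before` to `after` as a percentage of `before`."""
    return (before - after) / before * 100


for name, (before, after) in REPORTED.items():
    print(f"{name}: {before} -> {after} ({relative_reduction(before, after):.1f}% lower)")
```

Run as-is, it reports roughly a 73.7% cut in lead time, a 50.0% drop in defect leakage, 33.3% fewer tools, and an 87.5% shorter regression cycle. For defect leakage this is a relative drop. In absolute terms, leakage fell by 5 percentage points.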

Test Maturity Models (TMM, TMMi) Explained Simply

Your team likely has some automated tests, possibly a few manual testing steps, and everyone performs tasks slightly differently. When asked “how good is our testing?” the answer is usually “pretty good” or “we’re working on it.”

TMMi gives you a way to actually answer that question with specifics. It’s a framework that defines what mature testing looks like at different stages, so you can figure out where you are and what to work on next.

Summing it up: Instead of guessing whether your testing is effective, you get clear criteria to measure against.

Purpose of the TMMi model in software testing

Every team believes their testing is adequate until production breaks. TMMi provides objective criteria to assess your current testing process against proven standards.

It follows the staged structure of the Capability Maturity Model (and its successor, CMMI) but focuses specifically on software testing processes within organizations. Instead of generic process improvement, TMMi addresses testing-specific challenges.

The model helps you:

- Identify exact areas for improvement in your testing approach

- Benchmark your testing capability against industry standards

- Prioritize which testing improvements will have the biggest impact

- Track testing process maturity improvements over time

TMMi provides a roadmap for systematic test process improvement rather than random tool adoption or hiring more QA people.

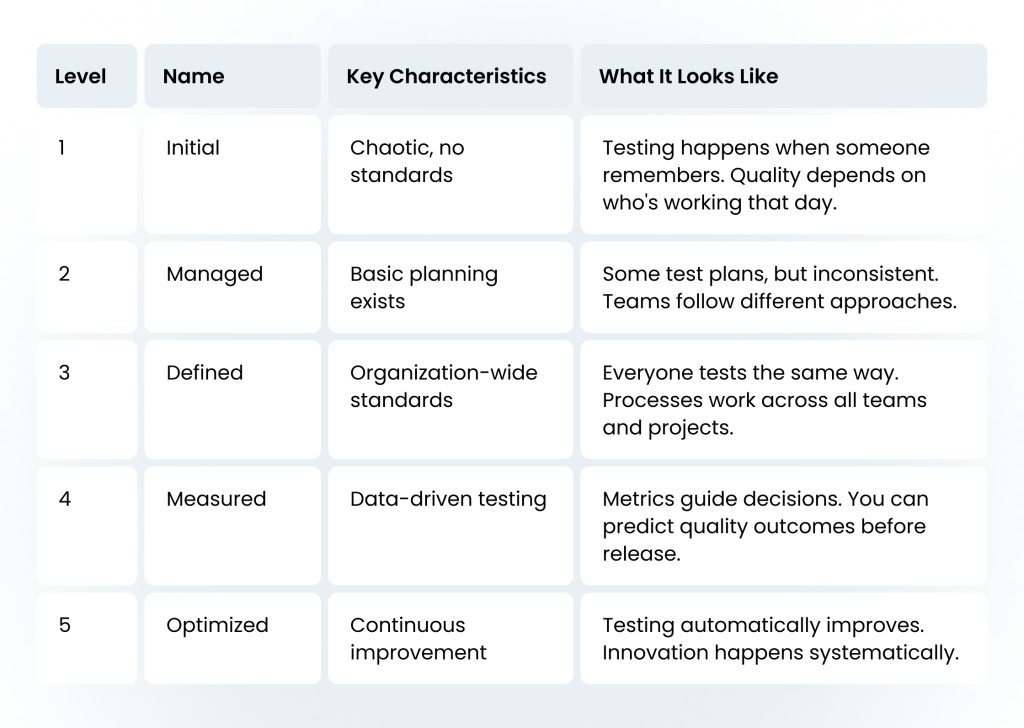

The 5 levels of TMMi — Quick reference table

How TMMi extends beyond the Capability Maturity Model (CMM)

The original Capability Maturity Model covers all software processes. TMMi drills down into testing-specific challenges that generic process frameworks miss.

Key extensions TMMi adds:

Testing-specific process areas. CMM talks about “process improvement.” TMMi defines exactly what mature testing processes look like in practice.

Test planning integration. CMM says little about how testing integrates with development workflows. TMMi shows how testing fits into every phase of the software development lifecycle.

Quality measurement frameworks. CMM focuses on process compliance. TMMi focuses on actual quality outcomes and testing effectiveness.

Risk-based approaches. TMMi includes specific guidance for risk assessment and mitigation through testing – something generic CMM doesn’t cover.

The difference matters. You can have CMM Level 3 processes and still ship broken software if your testing processes are immature.

TMMi ensures that process maturity translates into actual software quality, not just better documentation.

How to Identify Your Team’s TMMi Level

Testing maturity isn’t subjective. You can measure it with specific indicators that reveal how your organization actually works.

Indicator #1. Process consistency. Do all teams follow the same testing approach? Check if your mobile team tests differently from your backend team. If they do, you’re at Level 1 or 2.

Indicator #2. Integration timing. When does testing start in your development cycle? If it begins after the code is written, your process is immature. Mature teams write tests during or before coding.

Indicator #3. Automation coverage. Not just the percentage of automated tests. Look at what types of testing are automated. If you’re still manually testing login flows and basic CRUD operations, that’s low maturity.

Indicator #4. Defect discovery timing. Track where bugs are found. Level 1 teams find most defects in production. Level 4 teams find them during development or in automated pipelines.

Indicator #5. Release predictability. Can you accurately estimate release dates 4 weeks out? Mature testing makes releases predictable because quality gates are consistent.

Indicator #6. Change impact assessment. When requirements change, how long does it take to understand testing implications? Mature teams know immediately which tests need updates.

Indicator #7. Test data management. Do teams spend time creating test data for every test run? Mature organizations have systematic test data strategies that support consistent testing.

Here’s a quick assessment: If your team can’t deploy on Fridays because testing takes too long or is too risky, you’re probably at Level 2 or below.
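Indicator #4 is straightforward to compute once each defect records the phase in which it was found. The Python sketch below assumes a hypothetical defect log with a `found_in` field. The phase names and the `discovery_profile` helper are illustrative and do not come from TMMi or any specific bug tracker.

```python
from collections import Counter

# Hypothetical defect log: each entry records the phase where the defect was found.
# Phase names are illustrative; use whatever your tracker actually records.
defects = [
    {"id": 101, "found_in": "development"},
    {"id": 102, "found_in": "ci_pipeline"},
    {"id": 103, "found_in": "system_test"},
    {"id": 104, "found_in": "production"},
    {"id": 105, "found_in": "ci_pipeline"},
]

PRE_PRODUCTION = {"development", "ci_pipeline", "system_test"}


def discovery_profile(records):
    """Return (percentage of defects per phase, percentage found before production)."""
    counts = Counter(r["found_in"] for r in records)
    total = sum(counts.values())
    shares = {phase: 100 * n / total for phase, n in counts.items()}
    pre_prod = sum(n for phase, n in counts.items() if phase in PRE_PRODUCTION)
    return shares, 100 * pre_prod / total


shares, pre_production_pct = discovery_profile(defects)
print(shares)
print(f"Found before production: {pre_production_pct:.1f}%")
```

The trend across releases matters more than any single snapshot. A production share that keeps shrinking is the shift Indicator #4 describes.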

Levels of Test Maturity: Observable Signs

The journey to testing excellence follows a structured framework with five maturity levels.

Identifying your current testing maturity level requires looking at specific behaviors and outcomes in your organization. Each level has distinct observable signs that show how systematically your teams approach testing and quality control.

Level 1: Initial (Ad Hoc Testing)

Testing happens when someone remembers or when something breaks. No systematic approach exists.

Observable signs:

- “We don’t know what was tested” – No systematic tracking of test coverage;

- Testing occurs without documented processes or standards;

- Defect trends show reactive patterns with late discovery;

- Limited tool usage beyond basic bug tracking;

- No traceability between requirements and test cases;

- Project costs are unpredictable due to uncontrolled testing phases;

- Testing is barely connected to the team’s agile practices.

What you’ll experience:

- Inconsistent test execution across different phases of the software life cycle;

- High variability in product quality between releases;

- Successful test outcomes depend heavily on individual expertise rather than process.

Level 2: Managed (Basic test management)

Basic test planning and documentation emerge, but practices vary between projects and teams.

Observable signs:

- Test cases start to be reused as teams document basic test procedures;

- Introduction of fundamental testing policies and basic documentation;

- Testing activities start to follow the test management lifecycle;

- Initial establishment of test planning processes;

- Aspects of software testing become more visible to management.

What you’ll see:

- Basic test planning and monitoring practices;

- Initial metrics collection for test execution;

- Improved communication between development and testing teams;

- Foundation for efficient testing practices begins to form.

Level 3: Defined (Standardized testing)

Organization-wide testing standards exist. All teams follow the same documented processes consistently.

Observable signs:

- A standardized testing approach is applied across all projects;

- Comprehensive traceability from requirements through test execution;

- Defect containment efficiency metrics show measurable improvement;

- Established test design and implementation standards;

- Testing practices are consistent across teams.

What you’ll see:

- Standardized test processes across the whole organization;

- Test case reuse rate significantly increases through systematic cataloging;

- Documentation becomes comprehensive and consistently maintained;

- Integration testing becomes a defined process rather than ad hoc activity.
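Requirements-to-test traceability at Level 3 also answers Indicator #6: when a requirement changes, you can see which tests are affected. The Python sketch below illustrates both checks. It assumes a hypothetical in-memory map from requirement IDs to test case IDs. In practice this data would come from your test management tool, and the IDs and helper names here are illustrative only.

```python
# Hypothetical traceability map: requirement ID -> IDs of test cases that verify it.
trace = {
    "REQ-1": {"TC-10", "TC-11"},
    "REQ-2": {"TC-12"},
    "REQ-3": set(),  # no linked test yet: a traceability gap
}


def uncovered_requirements(trace_map):
    """Requirements with no linked test case."""
    return sorted(req for req, tests in trace_map.items() if not tests)


def impacted_tests(trace_map, changed_reqs):
    """Test cases to review when the given requirements change."""
    impacted = set()
    for req in changed_reqs:
        impacted |= trace_map.get(req, set())
    return sorted(impacted)


print(uncovered_requirements(trace))
print(impacted_tests(trace, ["REQ-1", "REQ-2"]))
```

Unknown requirement IDs simply yield no impacted tests. A stricter version could flag them, because an unknown ID is itself a traceability gap.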

Level 4: Measured (Quantitative management)

Testing decisions are driven by data and metrics. Quality outcomes become predictable through statistical control.

Observable signs:

- Automation ROI tied to coverage goals with quantifiable metrics;

- Defect trends analysis drives predictive quality measures;

- Advanced tool usage for continuous monitoring and measurement;

- Statistical process control applied to testing activities;

- A quantitative model is used to assess testing effectiveness.

What you’ll see:

- Metrics aligned with TMMi process areas are actively tracked and analyzed;

- Predictable project costs through quantitative testing models;

- Data-driven decisions for improvement in testing processes;

- Quality of testing measured through statistical methods.
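"Statistical process control applied to testing activities" can start small. The Python sketch below is a simplified Shewhart-style control chart. It sets limits at the baseline mean plus or minus three sample standard deviations and flags new values outside them. This is a simplification: a textbook individuals (XmR) chart estimates sigma from the average moving range instead. The baseline numbers and helper names are hypothetical.

```python
import statistics

# Hypothetical baseline: regression cycle time in minutes for recent builds.
baseline = [31, 29, 33, 30, 28, 32, 30, 31, 29, 30]


def control_limits(samples, k=3.0):
    """Return (lower, centre, upper) at mean +/- k sample standard deviations."""
    centre = statistics.mean(samples)
    sigma = statistics.stdev(samples)
    return centre - k * sigma, centre, centre + k * sigma


def out_of_control(samples, new_values, k=3.0):
    """Return the new values that fall outside the baseline's control limits."""
    lower, _, upper = control_limits(samples, k)
    return [v for v in new_values if v < lower or v > upper]


print(control_limits(baseline))
print(out_of_control(baseline, [30, 34, 45]))
```

Here a 45-minute run is flagged, while 34 minutes stays within normal variation. With only ten baseline points the limits are rough, so recompute them as more history accumulates.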

Level 5: Optimizing (Continuous Improvement)

Testing processes evolve and improve continuously, driven by data. Innovation happens systematically across the organization.

Observable signs:

- “Every risk has coverage” – Comprehensive risk-based testing approach;

- Testing excellence achieved through continuous process optimization;

- Defect containment efficiency reaches industry-leading levels;

- Advanced automation ROI tied to coverage goals with continuous optimization;

- Innovation in software testing practices drives industry advancement.

What you’ll see:

- Continuous improvement in testing through data-driven optimization;

- Maturity of software testing processes enables predictive quality outcomes;

- Testing industry leadership through innovative practices;

- Successful test outcomes are predictable and consistently achieved.

Most organizations can realistically target Level 3 within 12-18 months with focused effort. Level 4 requires another 6-12 months of measurement-driven improvement. Level 5 is ongoing optimization that never really ends.

Knowing your current level is just the starting point. The real value comes from tracking your progress with specific metrics that prove you’re actually improving, not just following new procedures.

Metrics That Align With TMMi Process Areas

Measuring testing maturity requires specific, quantifiable indicators. These core efficiency metrics provide clear benchmarks for each TMMi level and show direct business impact.

TMMi Core Efficiency Metrics

| Metric and formula | Level 2 | Level 3 | Level 4 | Level 5 | Business impact |
| --- | --- | --- | --- | --- | --- |
| Test Case Reuse Rate: (Reused test cases / Total test cases) × 100 | 20-30% | 40-60% | 60-80% | 80%+ | Directly reduces project costs and accelerates time-to-market |
| Defect containment efficiency: (Defects found in phase / Total defects) × 100 | 60-70% in testing phases | 75-85% in testing phases | 85-95% in testing phases | 95%+ in testing phases | Improves product quality and reduces post-release support costs |
| Automation ROI Tied to Coverage Goals: ((Cost savings from automation - Automation investment) / Automation investment) × 100 | n/a | 150-200% ROI with 60%+ coverage | 200-300% ROI with 80%+ coverage | 300%+ ROI with 90%+ coverage | ROI calculations must include coverage achievement metrics |
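The three formulas in the table translate directly into code. The Python sketch below computes them and maps reuse rate and defect containment efficiency onto the table's level bands. Where the table's ranges touch (for example 60-80% and 80%+), we assume the higher level wins. We also assume values below the Level 2 band count as Level 1. The input numbers and function names are hypothetical. ROI is not banded here because the table ties each ROI band to a coverage threshold as well.

```python
# Lower bound of each level's band, taken from the table above (highest level first).
REUSE_BANDS = [(5, 80), (4, 60), (3, 40), (2, 20)]
DCE_BANDS = [(5, 95), (4, 85), (3, 75), (2, 60)]


def reuse_rate(reused, total):
    """(Reused test cases / Total test cases) x 100."""
    return 100 * reused / total


def defect_containment_efficiency(found_in_testing, total_defects):
    """(Defects found in testing phases / Total defects) x 100."""
    return 100 * found_in_testing / total_defects


def automation_roi(savings, investment):
    """((Savings - Investment) / Investment) x 100."""
    return 100 * (savings - investment) / investment


def band(value, bands):
    """Highest level whose lower bound `value` meets; 1 (assumed) if below every band."""
    for level, lower in bands:
        if value >= lower:
            return level
    return 1


reuse = reuse_rate(reused=270, total=600)
dce = defect_containment_efficiency(found_in_testing=172, total_defects=200)
roi = automation_roi(savings=250_000, investment=100_000)
print(f"Reuse {reuse:.1f}% -> Level {band(reuse, REUSE_BANDS)}")
print(f"DCE {dce:.1f}% -> Level {band(dce, DCE_BANDS)}")
print(f"Automation ROI {roi:.1f}% (check against coverage before banding)")
```

A team can land in different bands on different metrics, as in this sample (reuse at Level 3, containment at Level 4). That is normal. Read the bands as per-metric signals, not as a single overall level.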

Advanced test maturity indicators

Higher maturity levels require more sophisticated measurement approaches. These advanced indicators help Level 4-5 organizations track systematic improvement and organizational testing capability.

| Indicator | What it measures | Formula or measurement method |
| --- | --- | --- |
| Risk Coverage Completeness | Measures progression toward “every risk has coverage”; integrates with the base model’s risk assessment frameworks | (Covered risk scenarios / Identified risk scenarios) × 100 |
| Process Adherence Score | Tracks how consistently teams follow test management processes, measured across all phases of the software life cycle; indicates the maturity of software testing processes | Systematic process compliance tracking |
| Test Environment Stability | Measures the reliability and consistency of test environments by tracking downtime, configuration drift, and setup failures; critical for predictable testing outcomes | (Successful test runs / Total test attempts) × 100 |
| Mean Time to Quality Gate | Average time from code commit to quality gate completion; indicates testing process efficiency and automation maturity and enables predictable delivery timelines | Time tracking across CI/CD pipeline stages |
| Quality Debt Ratio | Balance between known quality issues and new development; shows organizational commitment to technical quality and prevents accumulation of testing technical debt | (Outstanding quality issues / Total features) × 100 |
| Cross-Team Knowledge Transfer Rate | How effectively testing knowledge spreads across teams; measures the success of testing practice standardization and reduces dependency on individual expertise | Knowledge sharing sessions and practice adoption tracking |
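Three of these indicators come with explicit formulas, and the Python sketch below computes them for one reporting period. The others are tracking practices rather than formulas, so they are left out. The `AdvancedInputs` record and all sample counts are hypothetical. Note the direction of each indicator: higher is better for risk coverage and environment stability, while a higher quality debt ratio is worse.

```python
from dataclasses import dataclass


@dataclass
class AdvancedInputs:
    """Hypothetical raw counts for one reporting period."""
    covered_risk_scenarios: int
    identified_risk_scenarios: int
    successful_test_runs: int
    total_test_attempts: int
    outstanding_quality_issues: int
    total_features: int


def advanced_indicators(d: AdvancedInputs) -> dict:
    """Compute the three formula-based indicators from the table, as percentages."""
    return {
        "risk_coverage_completeness": 100 * d.covered_risk_scenarios / d.identified_risk_scenarios,
        "test_environment_stability": 100 * d.successful_test_runs / d.total_test_attempts,
        "quality_debt_ratio": 100 * d.outstanding_quality_issues / d.total_features,  # lower is better
    }


period = AdvancedInputs(
    covered_risk_scenarios=42, identified_risk_scenarios=50,
    successful_test_runs=1_880, total_test_attempts=2_000,
    outstanding_quality_issues=12, total_features=80,
)
results = advanced_indicators(period)
for name, value in results.items():
    print(f"{name}: {value:.1f}%")
```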

Most organizations collect testing data but never use it to make decisions. The difference between mature and immature teams isn’t the sophistication of their metrics — it’s how systematically they use measurement to improve their processes.

Start with the core efficiency metrics that match your current level. Add advanced indicators only when you have the foundation to act on what they reveal. Measuring everything without the capability to respond just creates reporting overhead, not maturity.

Simple Test Maturity Self-Check (Pre-Audit)

Use this checklist to identify where you sit on the five-level maturity spectrum and flag opportunities to improve your testing practices.

Level 1-2 Indicators (foundation building)

Testing documentation

- Test cases are documented but not systematically organized

- Basic test plans exist but lack comprehensive traceability

- Tool usage is limited to basic test management tools

Process consistency

- Testing approaches vary significantly between projects

- Limited improvement in testing practices between releases

- Defect trends are tracked but not systematically analyzed

Team capabilities

- Testing team knowledge varies widely across individuals

- Agile mindset adoption is inconsistent across testing activities

- Software testing practices depend heavily on individual expertise

Level 2-3 Indicators (standardization phase)

Standardized processes

- A TMM-based approach guides testing activities across most projects

- Test case reuse rate is measured and shows improvement trends

- Documentation standards are established and generally followed

Coverage and quality

- Aspects of software testing are systematically addressed

- Product quality metrics show consistent improvement

- Testing activities are integrated into all phases of the software life cycle

Measurement beginning

- Basic metrics are collected and reviewed regularly

- Defect containment efficiency is measured and improving

- Project costs for testing are more predictable

Level 3-4 indicators (quantitative management)

Advanced metrics

- Automation ROI tied to coverage goals is actively measured

- Defect trends analysis drives proactive quality decisions

- A model for assessing testing effectiveness is implemented

Optimization focus

- Efficient testing practices are continuously refined

- Quality of testing is measured through multiple quantitative indicators

- TMM provides a foundation for advanced optimization efforts

Self-assessment scoring

Count your checked items in each category:

- Foundation Building (Level 1-2): ___/9 items

- Standardization (Level 2-3): ___/9 items

- Quantitative Management (Level 3-4): ___/6 items

Interpretation guide for TMMi checklist

If most checks are in Foundation Building: Your organization is positioned at Level 1-2. Focus on establishing basic testing processes and building systematic approaches to improve the testing foundation.

If most checks are in Standardization: Your organization is positioned at Level 2-3. Concentrate on standardizing software testing practices and achieving consistent improvement in testing across all projects.

If most checks are in Quantitative Management: Your organization is positioned at Level 3-4. Focus on data-driven approaches that help you achieve testing excellence and continuously improve software quality.
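The scoring and interpretation rules above fit in a few lines of code. The Python sketch below reads "most checks" literally as the highest raw count. It also assumes that ties resolve to the lower-maturity category, which keeps the self-assessment conservative. The item limits come from the checklist, and the function name is hypothetical.

```python
# Item counts and level ranges from the checklist above, lowest maturity first.
CATEGORIES = {
    "Foundation Building": (9, "Level 1-2"),
    "Standardization": (9, "Level 2-3"),
    "Quantitative Management": (6, "Level 3-4"),
}


def interpret(checked: dict) -> str:
    """Return the level range of the category with the most checked items."""
    for name, count in checked.items():
        limit = CATEGORIES[name][0]
        if not 0 <= count <= limit:
            raise ValueError(f"{name}: expected 0-{limit} items, got {count}")
    # max() keeps the first maximum in insertion order, so ties go to the lower level.
    top = max(CATEGORIES, key=lambda name: checked.get(name, 0))
    return CATEGORIES[top][1]


print(interpret({"Foundation Building": 3, "Standardization": 7, "Quantitative Management": 2}))
```

Raw counts slightly favor the two nine-item categories. If that bothers you, compare each category's share of its own maximum instead.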

From One-Time QA Audits to Sustainable Maturity Work

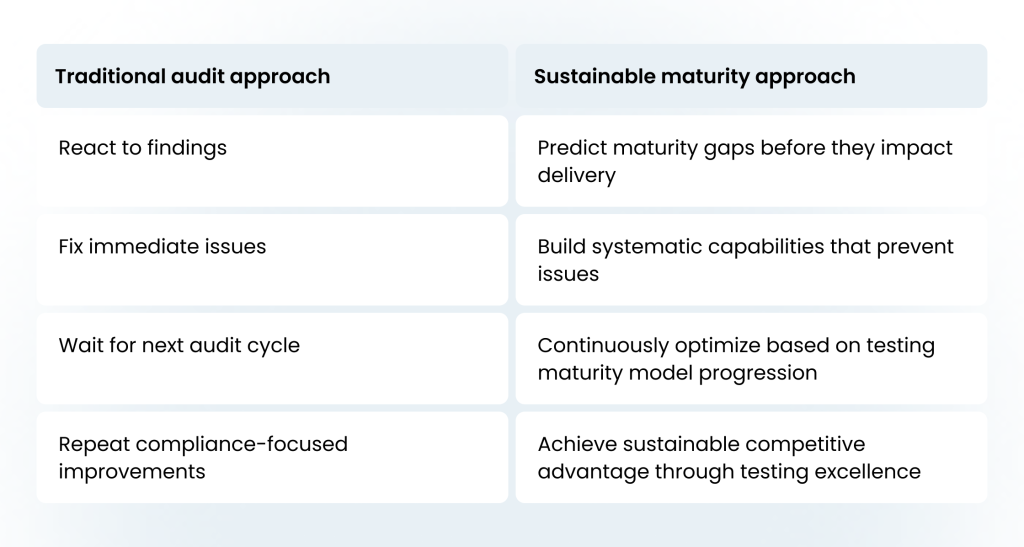

Most organizations treat QA audits as isolated events, periodic health checks that generate reports, action items, and temporary improvements. The testing maturity model exposes a fundamental flaw in this approach: maturity isn’t achieved through snapshots, but through sustained, systematic evolution.

Traditional one-time audits generate comprehensive findings that overwhelm teams, create compliance-focused improvements rather than capability building, lack continuity between assessment cycles, and miss the interconnected nature of testing process maturity. The result is a cycle of reactive fixes that never build lasting organizational capability.

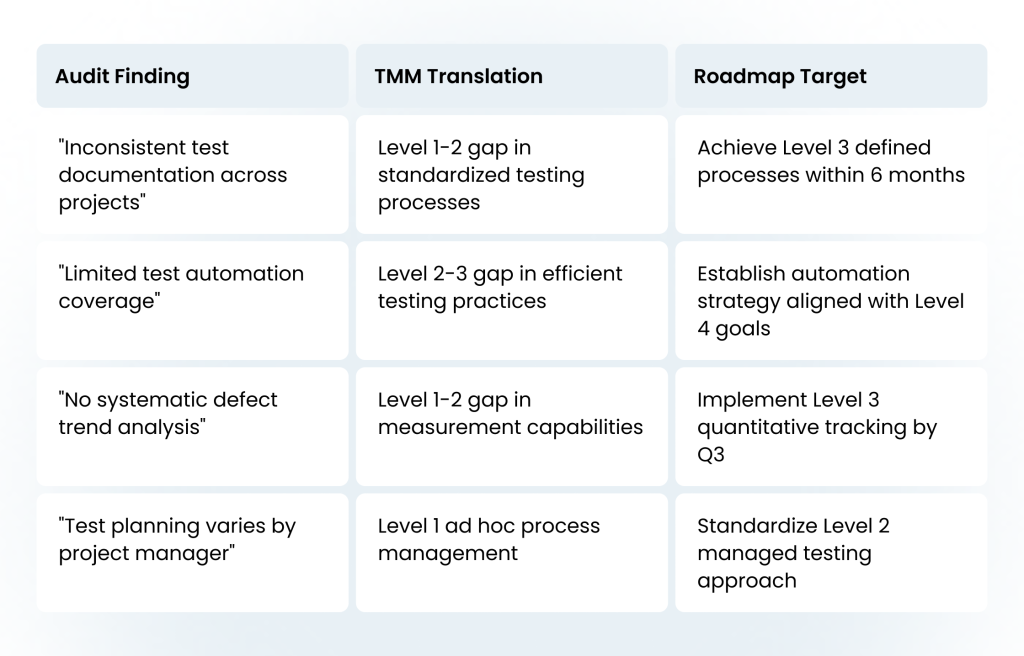

Using audit insights to start your TMMi-based roadmap

Your audit findings aren’t worthless — they’re just being used wrong.

Instead of treating audit results as a list of problems to fix, use them as baseline data for systematic maturity improvement. The key is translating individual findings into capability gaps and building improvement streams that address root causes, not symptoms.

Step 1: Map audit findings to TMM levels

Convert your audit results into test maturity model positioning using this translation approach:

Step 2: Build capability-focused improvement streams

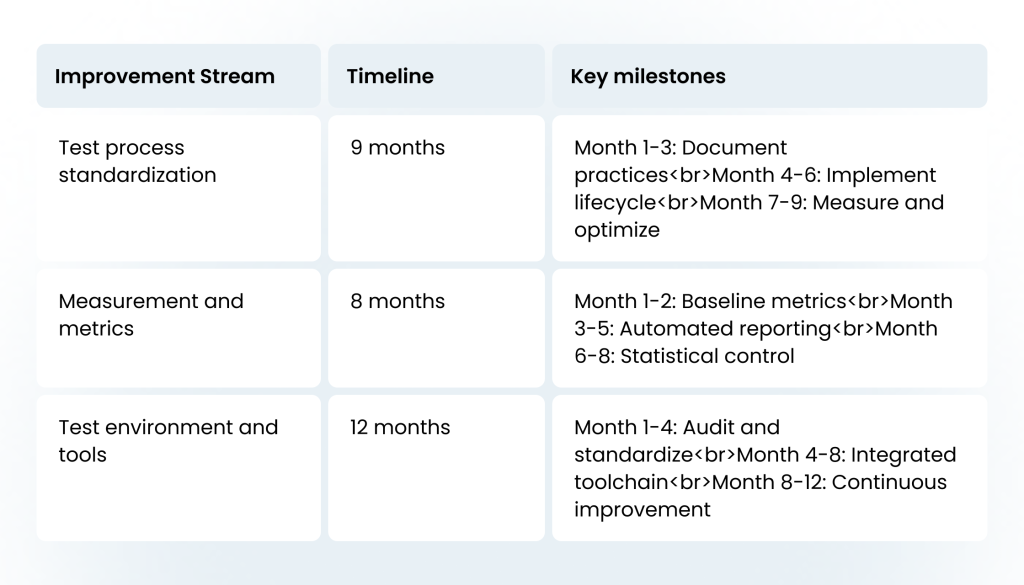

Instead of fixing individual findings, create integrated improvement streams based on TMM process areas:

Step 3: Establish continuous assessment cycles

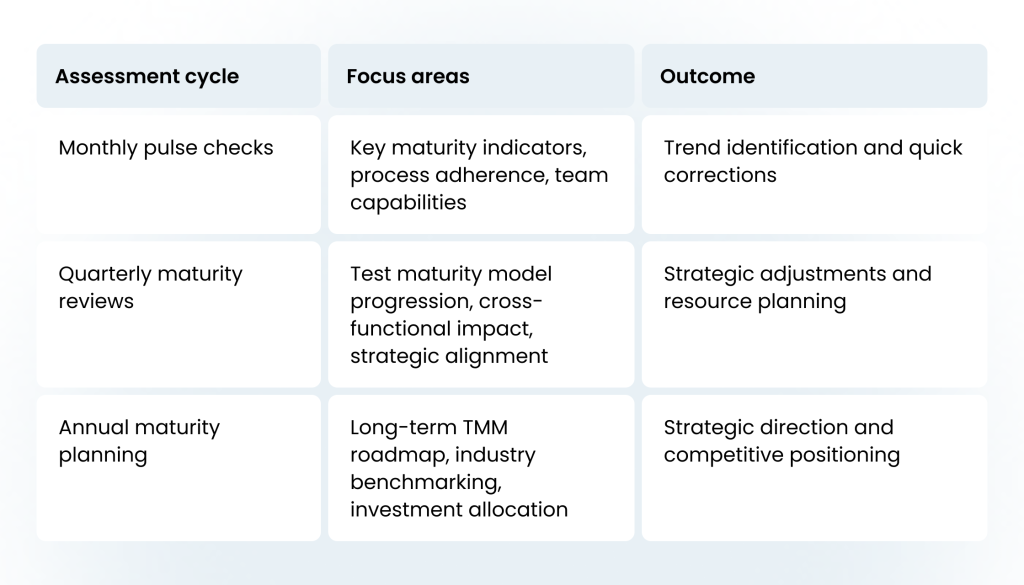

Replace annual audits with systematic testing maturity model progression tracking:

Sustainable testing maturity framework

TMMi becomes your continuous improvement engine rather than a periodic assessment tool. This approach builds maturity development into daily operations through:

- Leadership engagement: monthly dashboards and quarterly business impact reviews;

- Team empowerment: self-assessment tools and recognition systems;

- Process integration: maturity checks embedded in project gateways and technology decisions.

Cultural integration ensures that TMM principles become part of how the organization naturally operates, rather than an additional compliance burden.

From reactive to predictive

The bottom line: Sustainable testing maturity isn’t about passing the next audit. It’s about building organizational capabilities that make audits unnecessary. TMMi provides the roadmap; your audit insights provide the starting point.

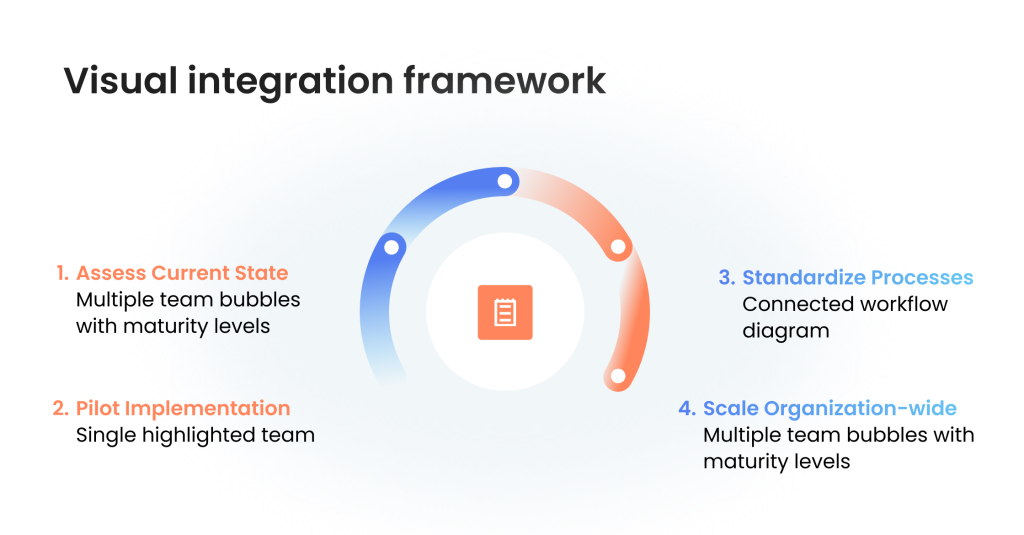

Building a Test Maturity Model Integration Strategy

Real integration means dealing with team resistance, skill gaps, and budget limits. You need a strategy that works with your current setup.

Here’s how to make it happen without breaking your delivery schedule.

Start with assessment, not tools.

Most organizations jump straight to buying testing tools or hiring consultants. This wastes money and creates more chaos.

Begin by mapping your current testing processes across all teams. Document what each team actually does, not what they’re supposed to do according to policy.

Pick one team to prove the model.

Don’t try to transform your entire organization at once. Choose your most motivated team or the one with the biggest testing pain points.

Implement TMMi practices with this single team first. Measure the results. Use their success to convince skeptical teams later.

Focus on process standardization before automation.

You can’t automate chaos effectively. Teams at Level 1 or 2 need consistent testing practices before they need better tools.

Establish standard test planning, execution, and reporting processes. Make sure everyone follows the same approach for similar testing objectives.

Build measurement into every improvement.

Track specific metrics that matter to your business goals:

- Time from code commit to deployment;

- Defect escape rate to production;

- Testing cycle time per feature;

- Test coverage for critical business flows.

Without measurement, you can’t prove that increased test productivity is actually happening.
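Two of these metrics can be computed from data most pipelines and trackers already hold. The Python sketch below assumes hypothetical deployment records with commit and deploy timestamps, plus simple defect counts. It uses the median lead time, a design choice that keeps one unusually slow release from skewing the figure. Defect escape rate here is the share of all defects found in production, the complement of defect containment efficiency when every pre-release phase counts as testing.

```python
from datetime import datetime
from statistics import median

# Hypothetical records; field names are illustrative.
deployments = [
    {"committed": datetime(2025, 3, 3, 9, 0), "deployed": datetime(2025, 3, 3, 15, 0)},
    {"committed": datetime(2025, 3, 4, 10, 0), "deployed": datetime(2025, 3, 5, 10, 0)},
    {"committed": datetime(2025, 3, 6, 8, 0), "deployed": datetime(2025, 3, 6, 12, 0)},
]
defects_found_before_release = 45
defects_found_in_production = 5


def commit_to_deploy_hours(records):
    """Median hours from code commit to deployment."""
    return median((r["deployed"] - r["committed"]).total_seconds() / 3600 for r in records)


def escape_rate(pre_release, production):
    """Share of all defects that escaped to production, as a percentage."""
    return 100 * production / (pre_release + production)


print(f"Median commit-to-deploy: {commit_to_deploy_hours(deployments):.1f} h")
print(f"Defect escape rate: {escape_rate(defects_found_before_release, defects_found_in_production):.1f}%")
```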

Create cross-team testing standards.

Individual teams can reach Level 3, but organizational maturity requires consistency across all software testing processes within your company.

Develop shared testing guidelines that work for different technology stacks. Your mobile team and backend team should follow compatible testing methodologies.

Plan for resistance and skill gaps.

Some developers will resist writing tests. Some QA engineers won’t adapt to automated testing approaches.

Budget for training and potentially replacing team members who can’t evolve with your mature testing process. This isn’t optional—it’s part of the real cost of improvement.

Timeline expectations that actually work

Most organizations underestimate the time needed for testing process maturity improvement.

- Level 1 to Level 3: 12-18 months for motivated teams;

- Level 3 to Level 4: 6-12 additional months with proper measurement systems;

- Level 4 to Level 5: ongoing optimization, no fixed timeline.

Warning: Common integration failures

These approaches consistently fail:

- Implementing TMMi as a checkbox compliance exercise;

- Buying expensive tools before establishing basic processes;

- Trying to change every team simultaneously;

- Focusing on certification instead of practical improvement.

Success indicators to track

You’ll know your integration strategy is working when:

- Teams stop asking for testing deadline extensions;

- Hotfixes become rare instead of routine;

- New team members can follow your testing approach without extensive training;

- Your testing capability scales with your development velocity.

Test Maturity In Agile and Continuous Testing Environments

Mature QA isn’t slower — it’s how agile teams go faster. Immature testing breaks under sprint pressure. You get rushed regression testing, late-discovered bugs, and unclear ownership when things break. Your “done” releases need hotfixes within days.

A mature testing process supports rapid iterations with fewer surprises. The testing process is managed as part of sprint planning, not as an afterthought.

How TMMi aligns with Agile and CI/CD

TMMi emphasizes process discipline, not documentation overload. It’s not anti-agile.

Here’s what it provides:

- Consistent test strategy across every sprint and epic

- Sustainable automation that doesn’t break every release

- QA integrated into planning, not just post-delivery validation

Each TMMi level builds on the one below it, applying capability-maturity principles to the realities of software testing. Teams at Level 3 and above embed testing into every phase of the software development life cycle.

From ad hoc checks to continuous quality feedback

Testing maturity in agile means tight feedback loops throughout the software life cycle.

Your test coverage ties directly to user stories. Defects get analyzed and closed within the same sprint. Automation validates builds in hours, not days.

Continuous improvement becomes routine. Teams don’t need separate “testing phases” because testing is integrated into the software development workflow.

Warning signs of low maturity in fast-release teams

You’ll recognize immature testing processes by these patterns:

- Frequent hotfixes after releases marked “done”;

- Automation constantly breaking due to unclear ownership;

- “We’ll test it later” mindset during sprint planning;

- Overloaded regression cycles that delay every release.

These patterns indicate your current testing process can’t handle agile velocity.

What high-maturity QA enables in agile

Mature testing processes within agile environments create reusable systems:

Test cases work across sprints and epics without constant rewriting. Risk-based prioritization ties testing efforts directly to business goals and delivery objectives.
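A common way to make risk-based prioritization concrete is to score each test by likelihood of failure multiplied by business impact, then run the highest-scoring tests first when time is short. This scoring convention is widely used but is not prescribed by TMMi. In the Python sketch below, the 1-5 scales, the test names, and the `prioritize` helper are all hypothetical.

```python
# Hypothetical test inventory rated 1-5 for likelihood of failure and business impact.
tests = [
    {"name": "checkout_payment", "likelihood": 4, "impact": 5},
    {"name": "profile_avatar_upload", "likelihood": 3, "impact": 1},
    {"name": "login_sso", "likelihood": 2, "impact": 5},
    {"name": "search_filters", "likelihood": 3, "impact": 3},
]


def prioritize(inventory, budget):
    """Rank tests by risk score (likelihood x impact) and keep the top `budget` names."""
    ranked = sorted(inventory, key=lambda t: t["likelihood"] * t["impact"], reverse=True)
    return [t["name"] for t in ranked[:budget]]


print(prioritize(tests, budget=3))
```

With a budget of three tests, the low-impact avatar upload drops out. That is the trade-off risk-based prioritization makes deliberately instead of by accident.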

QA gets involved in story grooming and planning, not just execution. They identify testing objectives during requirements, not after coding.

Continuous testing becomes part of your CI/CD pipeline architecture. It’s not bolted on as a separate step that slows deployments.
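One way continuous testing shows up in a pipeline is as a quality gate: a step that fails the build when agreed thresholds are missed. The Python sketch below shows the idea. The threshold names and values, the result fields, and both functions are hypothetical. In a real pipeline the results would be parsed from your test and coverage reports.

```python
# Hypothetical gate thresholds; tune them to your own baseline.
THRESHOLDS = {"min_pass_rate": 98.0, "min_critical_flow_coverage": 90.0, "max_open_blockers": 0}


def evaluate_gate(results: dict) -> list:
    """Return human-readable failures; an empty list means the gate passes."""
    failures = []
    if results["pass_rate"] < THRESHOLDS["min_pass_rate"]:
        failures.append(f"pass rate {results['pass_rate']}% is below {THRESHOLDS['min_pass_rate']}%")
    if results["critical_flow_coverage"] < THRESHOLDS["min_critical_flow_coverage"]:
        failures.append(
            f"critical-flow coverage {results['critical_flow_coverage']}% is below "
            f"{THRESHOLDS['min_critical_flow_coverage']}%"
        )
    if results["open_blockers"] > THRESHOLDS["max_open_blockers"]:
        failures.append(f"{results['open_blockers']} open blocker(s)")
    return failures


def gate_exit_code(results: dict) -> int:
    """Print any failures and return a process exit code (0 = pass, 1 = fail)."""
    failures = evaluate_gate(results)
    for failure in failures:
        print("Quality gate failed:", failure)
    return 1 if failures else 0


run = {"pass_rate": 99.2, "critical_flow_coverage": 87.5, "open_blockers": 0}
print("exit code:", gate_exit_code(run))
```

In a CI job you would pass the result to `sys.exit(...)` so that a non-zero code stops the pipeline. Keeping the thresholds in one place makes the gate the same for every team, which is the consistency mature testing depends on.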

The result: increased test productivity that scales with your development velocity, not against it.

FAQ — Testing Maturity and TMMi

Can we be at different TMMi levels in different teams?

Absolutely. Your mobile team might operate at Level 3 while your backend team is stuck at Level 1.

This happens when teams implement their own testing practices without organization-wide standards. One team gets automation right, another team still does everything manually.

But here’s the problem: you can’t claim organizational maturity if only some teams are mature. Real process maturity means consistent testing capability across your entire software development lifecycle.

What’s the difference between TMMi and test automation maturity?

TMMi covers your entire testing process maturity. Automation is just one piece.

You can have 90% test automation and still be at TMMi Level 2 if your testing methods are inconsistent or your current testing process lacks strategic planning.

Test automation maturity only measures tooling and coverage. TMMi measures whether your testing efforts actually improve software quality systematically.

Is TMMi only for enterprise-level QA teams?

No. The testing maturity model works for any size organization.

Startups can implement Level 3 testing practices with a team of three. Mid-sized companies often need TMMi frameworks to scale their testing capability without adding headcount.

Even if you’re working with outsourcing vendors, TMMi helps identify areas for improvement and benchmark their testing quality against your standards.

We’ve already invested in testing tools. Isn’t that maturity?

Tools alone don’t create maturity. We’ve seen companies spend $200k on testing platforms and still ship broken software.

Higher maturity requires defined processes for how teams use those tools. Without clear testing phases and measurement criteria, expensive tools just create expensive chaos.

Your current testing process determines tool effectiveness, not the other way around.

How often should we reassess our test maturity?

Reassess annually or after major changes to your software development approach.

Testing process maturity shifts when you change frameworks, add new product lines, or restructure teams. A software release that causes major production issues is also a good trigger for reassessment.

Think of maturity assessment as continuous improvement, not a one-time audit. Your testing methodologies should evolve with your business needs.

Wrapping Up: Testing Maturity Strategy and How to Implement It

The Testing Maturity Model gives you a clear path from reactive testing chaos to proactive quality control. Five levels, measurable criteria, and practical steps that work whether you’re a three-person startup or an enterprise team.

Time investment is significant. Getting from Level 1 to Level 3 takes 12-18 months for motivated teams. Level 4 requires another 6-12 months with proper measurement systems. Level 5 is ongoing optimization with no fixed timeline.

Not every team needs Level 5. Many organizations function perfectly well at Level 3 or 4. The key is matching your testing maturity to your business requirements, not chasing the highest level for its own sake.

Integration with agile works, but requires discipline. Mature testing processes support faster development; they don’t slow it down. Your testing strategy becomes part of sprint planning, not an afterthought.

The biggest mistake is trying to transform your entire organization at once. Pick one motivated team, prove the model works, then scale from there.

In this article, we covered:

- How to assess your current testing maturity level honestly;

- The five TMM levels with specific indicators for each stage;

- Metrics that actually matter for business impact;

- Integration strategies that work with existing workflows;

- Common pitfalls that derail testing maturity initiatives.

If you’re ready to move from reactive testing to systematic quality control, we can help. Our team has guided organizations through complete testing maturity transformations — from initial assessment to Level 4 implementation.

We know what works, what fails, and how to make the transition without disrupting your current delivery schedule.
