Automation testing is one of those technical fields where you never stop learning. Whether you’ve been doing it for a few months, a couple of years, or over a decade, there is always something new and exciting to find out. This is why this roundtable is a must-read for anyone working with automation testing or even considering it.

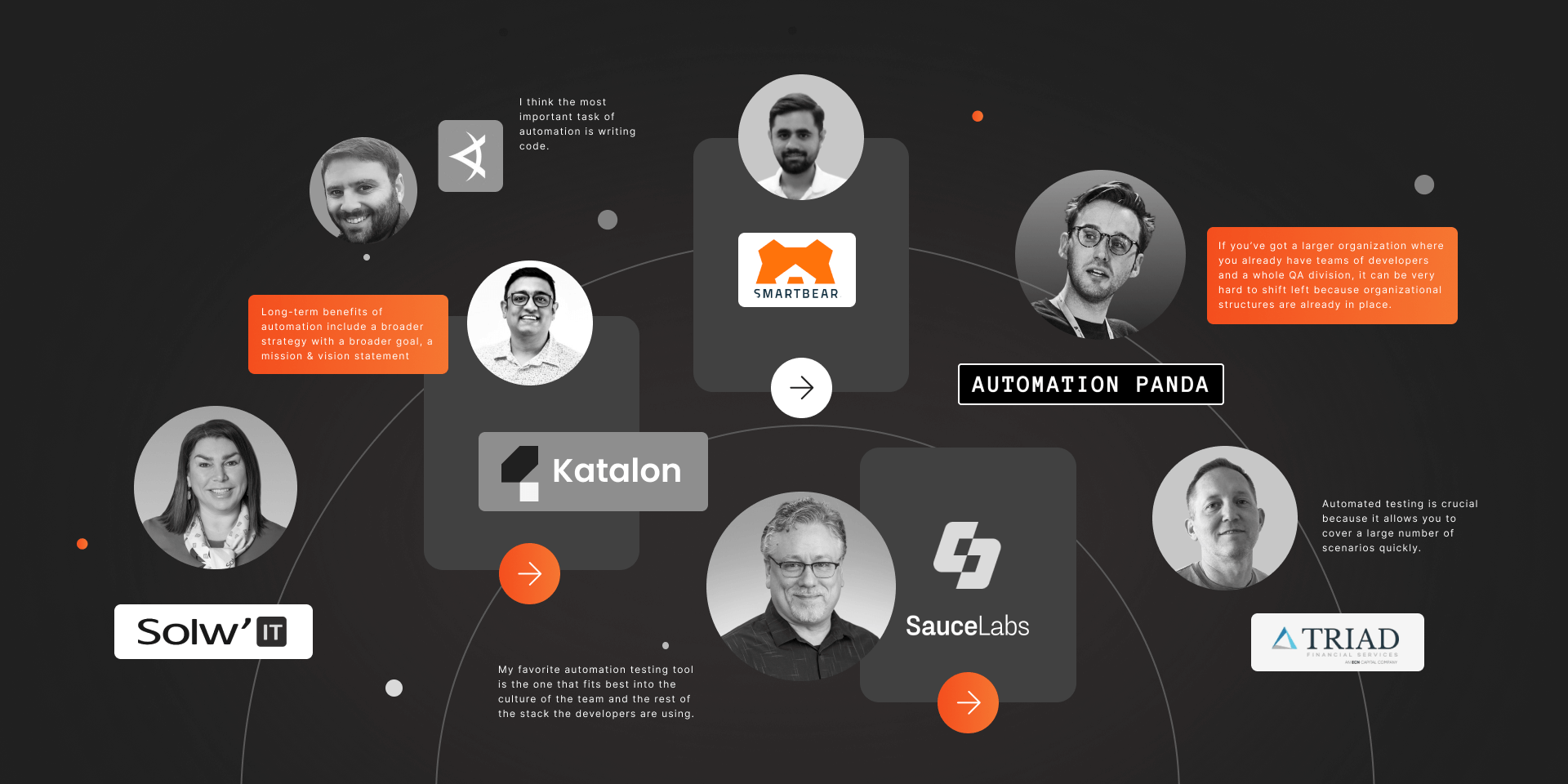

To get a comprehensive idea about the state of the automation industry and how to be good at automation testing, we’ve talked to some of the finest QA minds. Here are the industry leaders we interviewed for this article:

- Jesse Friess, Director of Software Engineering & Administration, Triad Financial Services

- Gokul Sridharan, Director of Solution Engineering, Katalon

- Oksana Wojtkiewicz, Head of Sales and Marketing, SOLWIT SA

- Nitish Bhardwaj, Senior Product Manager, SmartBear

- Marcus Merrell, Vice President of Technology Strategy, Sauce Labs

- Yarden Ingber, Head of Quality, Applitools

- Andrew Knight, Software Quality Advocate, AutomationPanda.com

And this is what we learned from them.

1. Automation testing: Why it’s important and what’s a good strategy for automating testing?

How do you know when a testing project needs automation, and how should you approach it in the most sensible way? Our experts know exactly how!

1. What are the short-term and long-term business benefits of automating testing?

Jesse Friess: Automated testing is crucial because it allows you to cover a large number of scenarios quickly. However, it is not the be-all and end-all of QA. There still needs to be unit testing done by the developer. This ensures the quality of the code at a micro level, while automation covers the macro.

Gokul Sridharan: Some of the short-term benefits of automation testing I’ve noted include upgrading skills within the team, making regression testing more repeatable, gaining the ability to do different types of testing, and making better use of the application inventory. Long-term benefits of automation include a broader strategy with a broader goal, a mission and vision statement on what to accomplish with automation, better financial planning for the organization, more choice in the technology or tools used to reach your goal, and better accountability across the organization.

Oksana Wojtkiewicz: Business benefits can only be weighed by considering the automation approach, technology, and tools used to create the product. In some cases, we can get test results within a few hours using commercial automation tools. For more complex test items, or when many automation tools or customized frameworks are involved, this time expands significantly, sometimes up to 2-3 months. Even so, this is not time wasted for the business: the time saved by automated testing can be used for other, more complex tasks. The most substantial long-term benefits include quick feedback on the quality of an application, the ability to test more often, and freeing up manual testers’ time and resources.

2. Is testing automation required for every software project, or are there situations where it’s not needed?

Jesse Friess: Automation is not always needed. For instance, if you purchase a COTS (commercial off-the-shelf) solution, there is no need to spend on testing. If you’re developing your own solutions in-house, they obviously need to be tested before you roll them out.

Gokul Sridharan: In my experience, there are specific application candidates you can choose for automation. Some really complex applications have multiple layers of elements and objects, and you will spend a ton of time just creating the script in the first place. Script creation time and time to value are two important things to think about when considering automation. Other candidates include legacy applications written in legacy programming languages; the benefits of automating these applications outweigh the cost.

Oksana Wojtkiewicz: Automation is not always required, and in many cases there is no business case for it. This is particularly true for short-term projects, where automating can take longer than developing the product. Another example is strictly hardware-related projects that require manual actions, e.g., replacing a chip or rewiring expansion cards. In this situation, the cost of setting up automated testing equipment has to be weighed against the benefits. A project focused only on the graphical part of the application (UI), which changes frequently, would be another example.

3. Are there any criteria, maturity-related or otherwise, that a company should meet to take advantage of automation?

Gokul Sridharan: In my experience, maturity comes with scale. In general, you want to start automating when a few things happen: your application under test is extensive and it takes a lot of time to get test coverage; you have a large team, but ineffective use of your resources has convinced you that automation is a necessity; you have multiple applications and only one team to manage them all; or you have multiple teams using multiple technologies.

4. Do we always need an automation testing strategy, or can we do without it?

Gokul Sridharan: Smaller teams may not need the formality of building a complete end-to-end automation strategy with an end game in mind. They have the luxury of experimenting. But any company that takes user experience seriously should pay real attention to strategy and have an end goal: a definition of what success means to them.

Oksana Wojtkiewicz: The automation strategy should include, among other things:

- The definition of the scope of automation and the level of testing

- The definition of the framework and tools for automation

- Identification of test environments

- Creation of the tests themselves and running them

It seems impossible to complete automation by skipping any of the above steps. It is worthwhile to keep in mind that not having a strategy can also be a strategy.

5. What features should companies consider when designing an automation testing strategy?

Gokul Sridharan: These are the questions I suggest answering when creating a strategy:

- What’s the test coverage?

- How to ensure edge cases are covered?

- What types of applications can be tested?

- How frequently to test & how long does it take?

- How complex is it to build?

- How can it be maintained & built upon?

- How can it be effective long-term?

- How can you drive an effective process?

- How to increase accountability & visibility across the organization & show why automation is much more important?

- If one approach doesn’t work, what’s the alternative, and how to evolve as tech evolves?

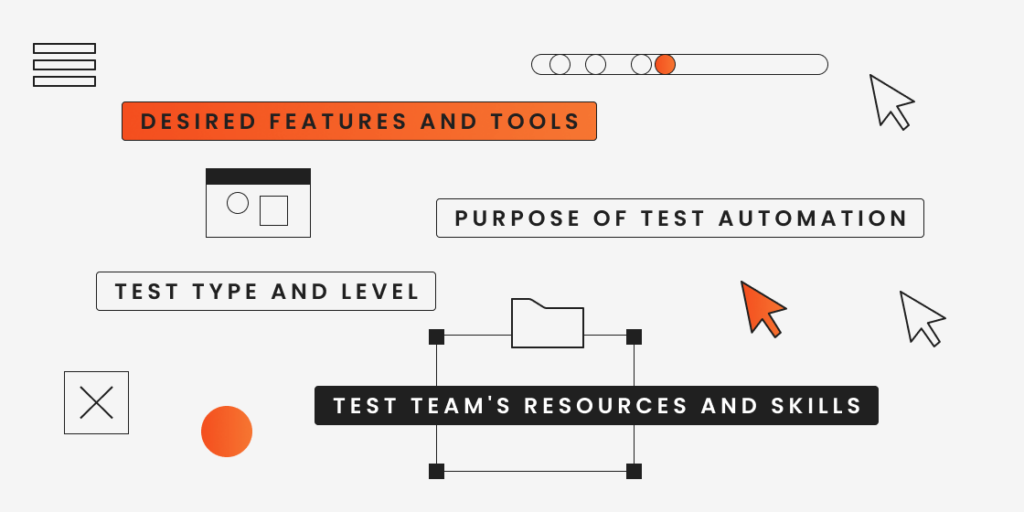

Oksana Wojtkiewicz: These features follow from the steps included in the strategy:

- Test type and level

- The test team’s resources and skills

- The desired features of the framework and automation tools

- The purpose of test automation

6. How do you know your automation testing strategy is actually good?

Gokul Sridharan: I would suggest the team ask itself the following questions about an automation strategy:

- Is it scalable? Does it consider all the difficult parts of your organization & growth potential?

- Will it work? As your organization grows and matures, will the test framework grow along with it?

- Did we consider the time to learn & time to evolve when considering an automation strategy?

Oksana Wojtkiewicz: A strategy is appropriate when it achieves the defined objectives with an acceptable ROI. If both criteria are met, we can say without a doubt that the chosen automation path is the right one.
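To make the ROI criterion concrete, here is a minimal back-of-the-envelope sketch in Python. It is a hypothetical model, not a method described in the interview: it assumes that both cost and benefit can be expressed in engineer-hours and that each automated regression run replaces one manual regression pass. The class and field names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class AutomationEstimate:
    """Hypothetical inputs for a simple test automation ROI estimate (all in hours)."""
    build_hours: float                # one-off effort to create the automated suite
    maintenance_hours_per_run: float  # upkeep attributed to each automated run
    manual_hours_per_run: float       # effort of one equivalent manual regression pass
    runs: int                         # regression cycles over the period considered


def automation_roi(e: AutomationEstimate) -> float:
    """Return ROI as (benefit - cost) / cost, where benefit is manual effort avoided."""
    cost = e.build_hours + e.maintenance_hours_per_run * e.runs
    benefit = e.manual_hours_per_run * e.runs
    return (benefit - cost) / cost


if __name__ == "__main__":
    estimate = AutomationEstimate(
        build_hours=200, maintenance_hours_per_run=2, manual_hours_per_run=16, runs=50
    )
    # cost = 200 + 2 * 50 = 300 h, benefit = 16 * 50 = 800 h
    print(f"ROI: {automation_roi(estimate):.2f}")  # ROI: 1.67
```

A positive value means the hours saved exceed the hours invested; whether that counts as "acceptable" remains a business decision. With only a handful of runs, the build cost dominates and the ROI turns negative, which mirrors the earlier point that short-term projects rarely justify automation.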

7. What are some best practices or trends you are witnessing in the software testing automation field?

Gokul Sridharan: Some of the trends I’ve noticed lately in testing automation include:

- Incorporating AI into automation

- Analytics and insights are everything

- Applications are getting complex — even more so than 10 years ago

- Visual testing is much more than image comparison

- Running your tests in the cloud and scaling out to multiple parallel sessions

- Data-driven testing (DDT) and behavior-driven development (BDD) are still popular

Oksana Wojtkiewicz: Recent trends suggest that many tools are adopting AI and a codeless approach to capture the market. It is also worth mentioning the open-source Playwright framework, which the testing community has been hearing more and more about.

2. Automation testing team: Who’s in charge of the operation?

A quick guide to building an automated testing team and helping it achieve maximum efficiency through management and communication.

1. Should there be a separate automation testing team, or does it need to operate as part of the general QA department?

Andrew Knight: It really depends on the organization’s needs and who they expect to develop the test automation. For example, if a small startup with only developers is building a web application, it probably doesn’t make sense for them to bring four or five QA engineers into the company rather than bake testing into their development process. On the other hand, if you’ve got a larger organization that already has teams of developers and a whole QA division, it can be very hard to shift left because the organizational structures are already in place. In that case, you may need to work with the QA organization and shift left from there.

Yarden Ingber: Depends on the product and the organization. What I want to see in an automation team is a team of developers. I think the most important task of automation is writing code. And in order to write code, you need to be a developer. So, I think a team of automation developers should be software developers.

Nitish Bhardwaj: In my opinion, there should be a dedicated automation testing team (or a smaller squad if working on a tight budget) that operates as part of the general QA department. While the QA team can also have some level of automation testing expertise, having a dedicated automation testing team ensures that automation testing is given the attention and resources it needs to be successful.

2. What are the typical roles in an ideal automation QA team? What are their responsibilities?

Nitish Bhardwaj: This depends on the size of the team, the budget for automation, and the maturity of the existing test automation system. If an organization is just starting with automation, it should have at least one automation lead and a couple of automation engineers to start with. In bigger organizations or more mature setups, the team will be bigger and have roles similar to those of a development team: an engineering manager, an architect, senior automation engineers, automation engineers, and operations engineers.

3. Which qualities are you looking for in candidates to fill each typical automation QA team role?

Yarden Ingber: If we are looking at automation developers as software developers, I would like to see software programming capabilities, and I would test them as you would test a software developer. Another aspect I think is very important is DevOps. I also want to know if they have experience specifically with the kind of product I’m working on. For example, testing native mobile apps is very different from testing web apps. So if I have a native mobile application, I could hire someone who has worked on testing web applications, but it will take them much longer to get up to speed.

Andrew Knight: If I’m specifically looking to hire an automation engineer or SDET, I want to make sure they can actually do the job of test automation, because many people inflate their skills in this area. I look for people who have decent programming skills; that’s the bare minimum. Then I want to see that they have actually worked on test automation projects somewhere, and I need to know whether they have done some sort of unit testing, API testing, and web UI testing. With entry-level specialists who don’t have a large testing portfolio, I gauge their interest in and aptitude for test automation. Some people just want to get their foot in the door, and I don’t want to hire someone who sees test automation as a stepping stone.

Nitish Bhardwaj: For all the roles, it’s very important that candidates understand the role of QA and test automation and that they are passionate about test automation. One mistake many organizations make is hiring developers to write test automation frameworks and systems. These developers might have better technical knowledge and stronger coding skills, but their lack of empathy for QA and its importance doesn’t serve QA teams well in the long term. For an Automation QA Lead, I would look for in-depth knowledge of automation testing tools and frameworks, experience developing and implementing automation testing strategies, and strong leadership and project management skills. For an Automation QA Engineer, I want to see good programming skills, experience developing automation testing scripts and frameworks, and an ability to troubleshoot issues and bugs with automation testing tools and scripts.

4. What is the smallest possible team size for an automation testing project?

Yarden Ingber: It really depends on the size of the company and the scale of the project. It also depends on the number of developers. So if you have fifteen software developers and one or two automation developers, the automation developers can become a bottleneck in the project pipeline. It’s very hard to decide on a perfect number, but there should be some balance between the number of developers and the number of automation developers. I would say it’s one QA for every five developers, but it can be more or less than that.

Andrew Knight: One automation engineer. It’s not ideal, but having at least one SDET or test automation engineer can add significant value. I know because that’s exactly what I did at my previous job: I was the first SDET they hired, and even though I worked alone for a long time, I was still able to add value. We weren’t aiming for 100% coverage, but the tests I wrote added significant value. Even though they covered only a fraction of the product, they were still catching bugs every time.

Nitish Bhardwaj: As I mentioned, it depends on the scope, complexity, and budget. However, if you have to start small, start with an automation QA lead. Do not make the mistake of hiring one or two QA engineers first and a lead later on. One QA lead with one or two QA automation engineers would be a great start.

5. Who do you think makes better automation QAs: people who switch from software development or from manual QA?

Yarden Ingber: I think a good strategy is “shift left”. The manual QAs can write the test cases manually, and the developers then write the code based on the cases the manual QAs created. I think that’s a good starting point for small companies. As you grow into a bigger company, with more products and more teams developing multiple projects at once, a strong automation team made up mostly of software developers can be a good approach, especially if you want a shared CI system for multiple development teams.

Andrew Knight: I would say that engineers who have a developer mindset are better at automation initially. As an automation QA, you create software that is meant to test other software, so you need the same development skills, such as doing code reviews, reading other people’s code, and testing your own code. People with a development background already have that skill set; they are just applying it to a different domain. Engineers who are traditionally manual testers, on the other hand, know how to write and execute good tests, but they don’t necessarily have those development-oriented skills.

6. Let’s say you are hired to build an automation process from scratch at an organization, and there is no concern about the budget. What is your ideal team setup?

Andrew Knight: My ideal setup is a small core team of SDETs who have a real developer mindset and the background to approach problems in test automation. This team would exist within the greater organization to empower other teams to succeed with test automation and quality. They would be almost like an internal consultancy, providing tools and recommendations and coming alongside teams to help them get started. But I also believe that the individual product teams need to be responsible for their own quality. They should not be writing code, throwing it over the fence, and having somebody else take care of software quality.


7. How do you effectively manage an automation QA team, and who should do it?

Nitish Bhardwaj: The automation QA team should be managed by the automation QA Lead, who should provide guidance, support, and feedback to team members. It is essential to ensure that team members have the necessary resources and tools to perform their jobs effectively. Regular communication, team meetings, and performance reviews can help to ensure that the team is meeting its goals and objectives. The QA automation lead can report to the QA manager/director, an engineering manager, or a technology leader.

8. When you already have a team, how do you evaluate it? How do you know it’s efficient? Are there some metrics you can use?

Yarden Ingber: It can be challenging to track the success of automation developers because they don’t develop the product itself, so you don’t get bug reports from customers. Still, there are definitely some metrics you can use. One is positive feedback from product developers: if they are happy with the test suite the automation developers created, that’s a sign of a good automation team. Another very important metric is the stability of the test suite, because if the suite is unstable, the entire company loses trust in it. At the same time, I don’t like the idea of measuring the team by the number of tests created or fixed per week, or similar hard metrics. I would rather have softer metrics.
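Test suite stability is easier to discuss when it is measured. One common way to quantify it is a flakiness rate: the share of tests that both passed and failed on the same code revision. The Python sketch below assumes a hypothetical run history of `(test_name, commit, passed)` tuples; real CI systems store results in their own formats, so the input would need adapting.

```python
from collections import defaultdict
from typing import Iterable, List, Tuple

Result = Tuple[str, str, bool]  # hypothetical record: (test_name, commit, passed)


def flaky_tests(results: Iterable[Result]) -> List[str]:
    """Names of tests that both passed and failed on the same commit."""
    outcomes = defaultdict(set)
    for name, commit, passed in results:
        outcomes[(name, commit)].add(passed)
    return sorted({name for (name, _), seen in outcomes.items() if seen == {True, False}})


def flakiness_rate(results: Iterable[Result]) -> float:
    """Fraction of distinct tests that showed flaky behavior at least once."""
    results = list(results)  # materialize so the data can be scanned twice
    all_tests = {name for name, _, _ in results}
    if not all_tests:
        return 0.0
    return len(flaky_tests(results)) / len(all_tests)


if __name__ == "__main__":
    history = [
        ("test_login", "a1", True),
        ("test_login", "a1", False),  # same commit, different outcome: flaky
        ("test_cart", "a1", True),
        ("test_cart", "a2", False),   # different commit: possibly a real regression
        ("test_search", "a1", True),
    ]
    print(flaky_tests(history))               # ['test_login']
    print(f"{flakiness_rate(history):.0%}")   # 33%
```

Grouping by commit is the key design choice here: a test that fails only after a new change may be catching a genuine regression, whereas one that flips on identical code is the kind of instability that erodes trust in the suite.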

Andrew Knight: You essentially want to build a scorecard that indicates the health and maturity of the team in their testing journey. These metrics shouldn’t be punitive, but rather a way to reveal opportunities for areas of improvement. If you make the metrics punitive, then the whole thing is off. You’ve got to make sure that you message that appropriately. I believe metrics should reflect part of the story, but not the whole story. Some of the things I would look for when scoring a team would be things like:

- How long does it take you to develop a new test?

- Does the framework help you or hinder you from making progress?

- How long does it take to run a test suite: 15 minutes, an hour, 20 hours?

- How long is the feedback loop, from a developer pushing a change to getting the result?
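Two of the scorecard questions above, suite runtime and feedback time, can be computed directly from CI timestamps. The sketch below is a hypothetical example: it assumes each pipeline run is recorded as a dict with ISO 8601 `pushed_at`, `suite_started_at`, and `result_at` fields, an illustrative schema rather than any particular CI tool's format. It reports medians so that a single unusually slow run does not dominate the score.

```python
from datetime import datetime
from statistics import median
from typing import Dict, List


def minutes_between(start: str, end: str) -> float:
    """Elapsed minutes between two ISO 8601 timestamps."""
    delta = datetime.fromisoformat(end) - datetime.fromisoformat(start)
    return delta.total_seconds() / 60


def scorecard(runs: List[Dict[str, str]]) -> Dict[str, float]:
    """Median feedback time (push to result) and median suite runtime (start to result)."""
    feedback = [minutes_between(r["pushed_at"], r["result_at"]) for r in runs]
    runtime = [minutes_between(r["suite_started_at"], r["result_at"]) for r in runs]
    return {
        "median_feedback_min": median(feedback),
        "median_suite_runtime_min": median(runtime),
    }


if __name__ == "__main__":
    # Illustrative timestamps only.
    runs = [
        {"pushed_at": "2024-05-01T09:00:00", "suite_started_at": "2024-05-01T09:05:00",
         "result_at": "2024-05-01T09:20:00"},
        {"pushed_at": "2024-05-01T10:00:00", "suite_started_at": "2024-05-01T10:10:00",
         "result_at": "2024-05-01T11:10:00"},
        {"pushed_at": "2024-05-01T14:00:00", "suite_started_at": "2024-05-01T14:02:00",
         "result_at": "2024-05-01T14:17:00"},
    ]
    print(scorecard(runs))  # {'median_feedback_min': 20.0, 'median_suite_runtime_min': 15.0}
```

Numbers like these tell part of the story, not the whole story; they work best as a way to spot improvement opportunities rather than as a punitive measure.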

9. Is it possible to run a distributed automation testing team? How do you build efficient communication with a distributed or offshore team?

Yarden Ingber: All of my automation developers are outsourced and do not share an office with me, while most of the software developers do. What I see is that there is a very big advantage for people who work together in person. You can see it day to day: when I sit with someone to eat lunch, we talk about work, and ideas pop up. This is something you can hardly have if you only communicate through text or Zoom meetings. But we’re trying! It’s very important for me to open each meeting with at least 15-20 minutes of talking about everything except work. We talk about the weather in everyone’s country, personal matters, family, traveling, and even how their weekend was. Personal communication is harder when everyone is in a different location, so these discussions absolutely help.

Andrew Knight: I definitely enjoy working with an in-house team more, but we also successfully adjusted to the shift to remote work. Plus, working in tech, we were more prepared to go remote than many other industries. But there isn’t much I’m doing differently than with in-house teams. You do have to be more intentional with communication to make sure that you can build both personal and professional connections. I would love to be able to meet up in person regularly, but when that is not available, you can still make it work.

Nitish Bhardwaj: I think with remote working and effective collaboration tools, it’s not going to be a challenge. It is essential to establish clear communication channels and set expectations for communication and feedback. Additionally, it is important to ensure that the team members have the necessary resources and support to perform their jobs effectively, regardless of their location — for example, it’s vital to ensure equal access to the test environment from all locations, and take into account things like network lags.

10. What is team culture to you when we’re talking about automation testing?

Yarden Ingber: I think that both manual QAs and automation developers are very dependent on the product developers. So for both manual and automation QAs, I value the ability to communicate well: to explain their ideas and what is blocking progress. When they have a request for the developers and can communicate their needs clearly, the developers can help quickly. As for other aspects of team culture, I don’t think a company should be completely homogeneous. People with different views and different personalities can benefit from one another, so differences should not be an issue.

Andrew Knight: I think there are certain things that people on a test automation team would understand that people from other teams would not, such as test automation diversity or different testing conferences and events. That’s part of the team culture. You also want a healthy team culture. You want people who are respectful of each other, optimistic, energetic, with a go-getter attitude, who love what they’re doing and enjoy working with people on the team, who are not afraid to give critical feedback but do so in a healthy manner. Those are the qualities I look for in a team.

3. Automation testing tools: Choosing the right one for the job

Find out how to pick the perfect tool and technology for your testing automation project.

1. What are your favorite automation testing tools and why?

Marcus Merrell: I don’t tend to choose tools until I understand what the project is and what they’ll be used for. I guess my answer is that my favorite automation testing tool is the one that fits best into the culture of the team and the rest of the stack the developers are using. The tools I’ve used most often in my automation career have been some variation of Java/TestNG/Selenium, but I would never say that those are adequate for all testing purposes. It usually takes over a dozen different tools sprinkled throughout the SDLC to make automated testing happen.

2. Do you pick tools based on the project specifics, or do you have a universal go-to tool set?

Marcus Merrell: I think there’s no such thing as a universal go-to tool set. Even if you’re going from one eCommerce web app to another, the tool selection should reflect the team, the programming languages they prefer, the CI/CD product they’re using, and a bunch of other factors. I tend to gravitate toward projects that use Java, just because I’ve found that to be the best language for distributed, multinational teams of variable skill levels working on the same stack at the same time. But I understand that’s not the right answer for everyone, and that the market is rapidly shifting toward JavaScript, TypeScript, Rust, and other, newer languages and philosophies.

3. Who is involved in the process of selecting the tools at your organization?

Marcus Merrell: It’s generally left up to the teams, but in some cases, we don’t have many choices. Our teams are oriented towards microservices to provide APIs to internal and external customers. We have some flexibility, but Sauce Labs is a software company that’s fundamentally in the business of managing hardware. When you have several thousand iOS and Android devices in a lab, you’re somewhat limited to the choices offered by Apple and Google, not to mention all the low-level networking and USB cable-level code we have to deal with.

4. What are the features you are looking for in an ideal tool?

Marcus Merrell: Personally, I start by looking for open-source tools with a strong community. The community around an open-source project is a lot like the corporation behind a commercial product: a strong community means strong support and ongoing maintenance and feature work. A weak community is similar to what you get when you buy a small product from a large corporation. When choosing commercial tools, I rely on references and my network to help me understand the product’s roadmap, stability, support, etc. I also favor tools that do one thing and do it well. I’ve been involved with projects where people ask us to add some random features because “competing projects have XYZ features, so why don’t you?” My preference is always to explain why they don’t actually want us to implement a poor version of XYZ, but rather to integrate with an amazing tool that specializes in XYZ. This way, we can continue to be experts in our thing, while they stay experts in theirs. I’m a big fan of specializing.

5. What other principles do you use in your decision-making process?

Marcus Merrell: I come from the open-source world, so I have a natural inclination toward open-source tooling. I like projects that have a rich ecosystem of commercial support surrounding an open-source product. This means I pay people to help me use a product that’s free, rather than paying some seat or consumption price for the software. There are pros and cons to this approach, and I’ve definitely bought my share of commercial software, but this is my initial approach.

6. Do the available automation tools fully meet your needs, or do you feel like the market is missing something?

Marcus Merrell: The market is absolutely missing some critical things, and as far as I can tell, nobody is even seriously trying to attain them. While the entire ecosystem of testing products is trying to automate the human tester out of a job, we’re not getting answers to simple questions like “What is the value of my automated tests?”, “How can I make my testing better, more effective, and faster?”, and “What kind of testing am I missing?”. The analysis of test signals is only being considered by a couple of products, and it drives me a bit crazy because this is such a solvable problem. We have all this data but no idea how to analyze it. In my opinion, this is more valuable than an effort at low-code test automation.